Week 19/31: Cloud Computing for Data Engineering Interviews

Understanding Cloud Computing and Infrastructure as Code (IaC) and their roles in Data Engineering

In the last decade, cloud computing has reshaped Data Engineering.

For Data Engineers, cloud computing is no longer optional.

Cloud services are the foundation of modern data pipelines. From storing raw data to orchestrating sophisticated transformation workflows, the cloud offers services and tools designed to support every stage of the data lifecycle.

Most companies today, from early-stage start-ups to large enterprises, run their services and data platforms on the cloud. As a result, employers actively seek candidates with hands-on experience with at least one major cloud provider such as AWS, Azure, or Google Cloud Platform (GCP).

Interviewers are no longer just looking for traditional ETL or SQL skills; they want engineers who can design scalable data systems, build resilient pipelines, and manage cloud resources efficiently. These abilities have become fundamental for standing out in both entry-level and senior-level interviews.

In this post, we will discuss:

What is Cloud Computing?

The most common cloud services and platforms that Data Engineers work with.

The must-know tools that are frequently discussed in technical interviews.

Infrastructure as Code (IaC) and Terraform.

Cloud best practices for Data Engineers.

What is Cloud Computing?

At its core, cloud computing is the delivery of computing services such as storage, processing power, databases, networking, and software over the internet, instead of relying on local servers or personal machines.

Rather than buying and maintaining physical hardware, companies can rent the resources they need from cloud providers like Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP). These resources are available on demand, can scale up or down as workloads change, and are billed based on usage, offering a flexible and often more cost-efficient alternative to traditional infrastructure.
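To make the usage-based billing idea concrete, here is a minimal sketch that compares paying per hour with owning hardware. The prices and the workload are hypothetical numbers chosen only for illustration; they are not real rates from any provider.

```python
# Hypothetical prices, chosen only to illustrate the billing model.
HOURLY_RATE_USD = 0.50          # assumed on-demand price per server-hour
FIXED_SERVER_COST_USD = 300.00  # assumed monthly cost of owning one server


def on_demand_cost(hours_used: float, servers: int = 1) -> float:
    """Monthly cost when paying only for the hours actually used."""
    return hours_used * servers * HOURLY_RATE_USD


def fixed_cost(servers: int = 1) -> float:
    """Monthly cost of owned hardware, paid whether it is busy or idle."""
    return servers * FIXED_SERVER_COST_USD


# Assumed workload: a nightly batch job that needs 4 servers
# for 2 hours, 30 nights a month.
batch_hours = 2 * 30
print(on_demand_cost(batch_hours, servers=4))  # 120.0
print(fixed_cost(servers=4))                   # 1200.0
```

Under these assumed numbers, the bursty batch job is far cheaper on demand. A workload that keeps servers busy around the clock would narrow or reverse the gap, which is why usage patterns matter when estimating cloud costs.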

In the context of Data Engineering, cloud computing allows teams to:

Store structured and unstructured data.

Run data processing workloads at scale.

Build automated, reliable, and distributed data pipelines.

Deploy solutions quickly across different regions of the world.

In interviews, candidates are often asked about their experience with specific services, their ability to design cloud-native architectures, and their familiarity with data engineering concepts in the cloud.

Note: As the co-authors of Pipeline To Insights, we have participated in 50+ Data Engineering interviews in total, and in every single one, at least one or two questions were related to cloud services.

Whether it was a general question like "Are you familiar with [AWS / Azure / GCP]?" or a more practical scenario like "How would you design [X] using [Y cloud service]?", cloud expertise consistently came up.

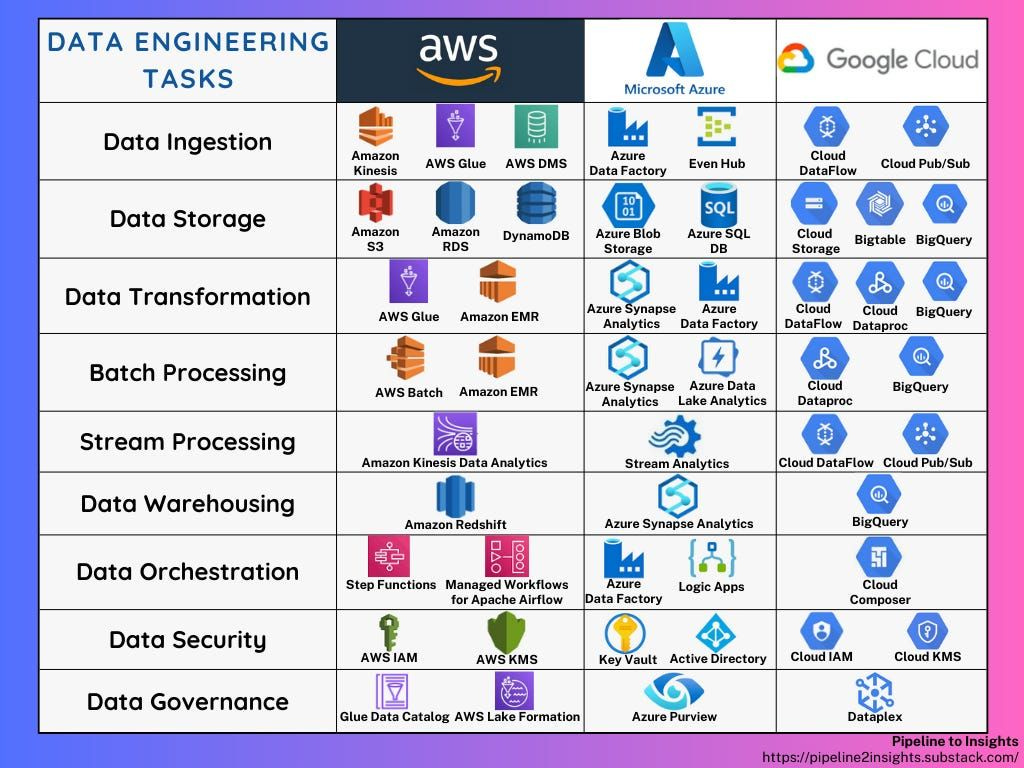

A list of common Data Engineering tasks and the corresponding services offered by the three biggest cloud providers.

In the next section, we will focus on some of the most common tools.