Data Ingestion: Batch vs. Stream, Which Strategy is Right for You?

Choosing Between Batch and Stream Processing for Optimal Data Pipelines

Source systems and data ingestion represent the biggest bottlenecks in the data engineering life cycle, as Joe Reis noted in his Data Engineering course.

By working directly with source system owners to understand how their systems operate, how they generate data, and how that data may evolve over time, you'll gain insights into how these changes can affect the downstream systems you build. This approach positions you to avoid common pitfalls during the data ingestion phase.

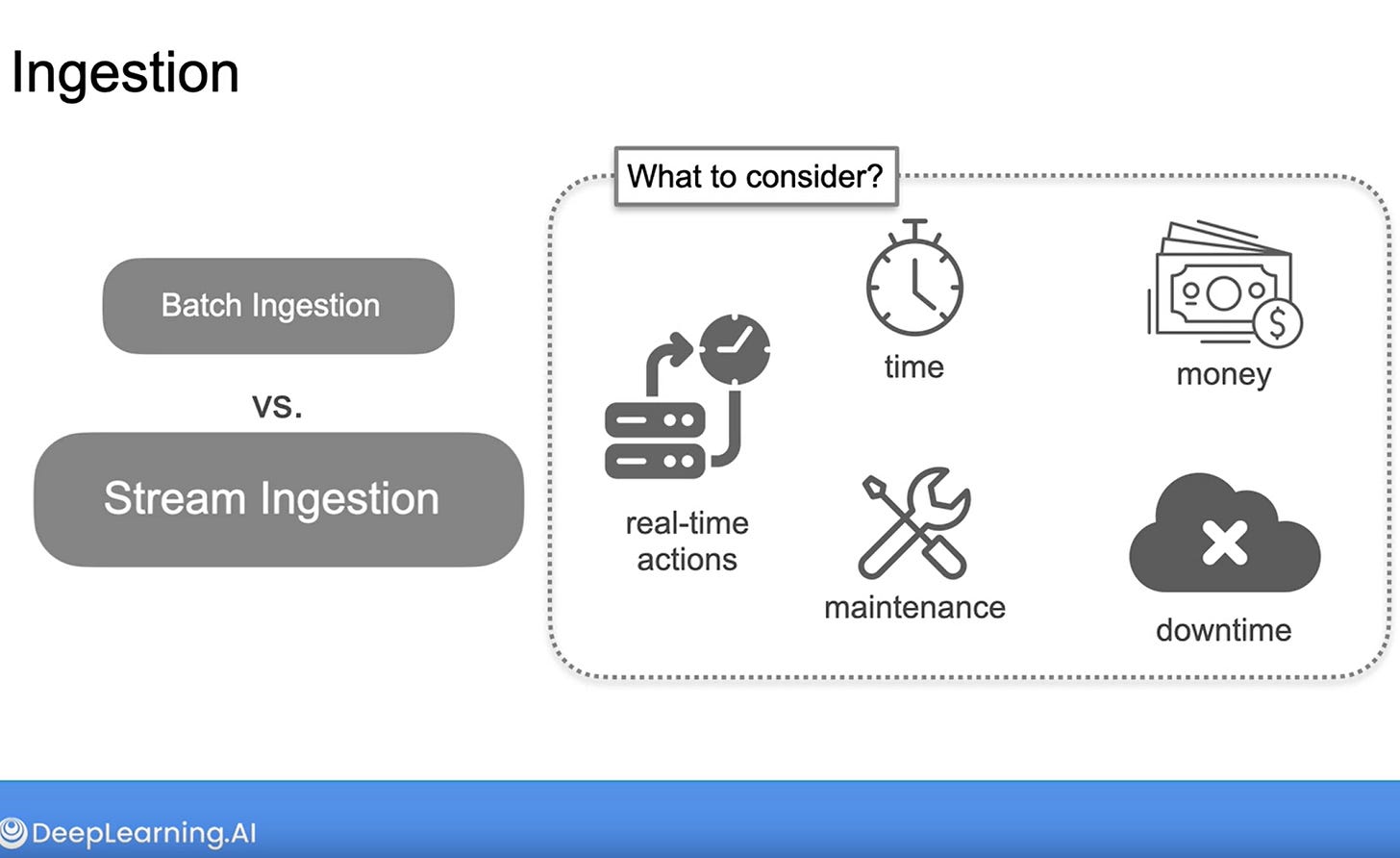

In Data Engineering, there are two major data ingestion patterns:

Visual from DeepLearning.AI (”https://www.deeplearning.ai/courses/data-engineering/”)

Batch Processing

Definition: Collects and processes data at set intervals, such as hourly or daily, rather than continuously.

Advantages:

Handles large volumes of data at once, typically based on a time interval or when data accumulates to a certain threshold.

Historically the go-to method for data ingestion; continues to be practical and widely used.

Use Cases:

Effective when immediate data isn't essential, such as in analytics and machine learning tasks that can work with delayed data.
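To make the "time interval or threshold" idea concrete, here is a minimal sketch in plain Python. The `BatchBuffer` class, its parameters, and the `sink` callback are hypothetical names invented for illustration, not part of any library; a real pipeline would usually rely on a scheduler or an ingestion tool instead.

```python
import time
from typing import Callable, Dict, List


class BatchBuffer:
    """Accumulates records and hands them to `sink` as one batch.

    A flush happens when the buffer holds `max_records` records, or when
    `max_age_seconds` have passed since the first buffered record.
    The age check only runs when a new record arrives (a simplification).
    """

    def __init__(
        self,
        sink: Callable[[List[Dict]], None],
        max_records: int = 1000,
        max_age_seconds: float = 3600.0,
    ) -> None:
        self._sink = sink
        self._max_records = max_records
        self._max_age = max_age_seconds
        self._records: List[Dict] = []
        self._first_at = 0.0

    def add(self, record: Dict) -> None:
        if not self._records:
            # Remember when the current batch started filling up.
            self._first_at = time.monotonic()
        self._records.append(record)
        too_many = len(self._records) >= self._max_records
        too_old = time.monotonic() - self._first_at >= self._max_age
        if too_many or too_old:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered as one batch, then start over."""
        if self._records:
            self._sink(list(self._records))
            self._records.clear()


# Usage: collect batches in a list instead of writing to a warehouse.
batches: List[List[Dict]] = []
buffer = BatchBuffer(sink=batches.append, max_records=3)
for i in range(7):
    buffer.add({"id": i})
buffer.flush()  # flush the remainder at the end of the run
```

With a threshold of three records, the seven inputs end up in batches of three, three, and one.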

Stream Processing

Definition: Collects data continuously, capturing it almost immediately after it's produced.

Advantages:

Views data as a continuous flow and handles events as they happen (think website clicks or live sensor readings).

Data becomes available to downstream systems in near real-time, often within seconds of generation.

Use Cases:

Ideal for scenarios requiring prompt responses, like real-time monitoring or live updates, where acting on data immediately is crucial.
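By contrast, a streaming consumer reacts to each event as it arrives instead of waiting for a batch to fill. The sketch below uses a Python generator as a stand-in for a live source; `sensor_events`, `process_stream`, and the temperature threshold are all made up for illustration.

```python
from typing import Dict, Iterable, Iterator


def sensor_events() -> Iterator[Dict]:
    """Hypothetical stand-in for a live source such as a message queue."""
    for reading in (21.5, 21.7, 48.9, 21.6):
        yield {"sensor": "s1", "temp_c": reading}


def process_stream(events: Iterable[Dict], threshold_c: float = 40.0) -> Iterator[str]:
    """Handle each event as soon as it is produced and yield alerts right away."""
    for event in events:
        if event["temp_c"] > threshold_c:
            yield f"ALERT {event['sensor']}: {event['temp_c']} C"


# Usage: each alert is available the moment its event has been seen.
alerts = list(process_stream(sensor_events()))
```

Only the third reading crosses the threshold, so a single alert is produced without waiting for the rest of the data.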

Batch and Streaming Together

It’s important to understand that batch and streaming often work together in a data pipeline. For instance, while machine learning models are typically trained on batch data, that same data might have been ingested through streaming to meet other needs, like real-time anomaly detection.

Pure streaming pipelines are rare; most systems blend both approaches. The key is deciding where to use batch and where to use streaming, depending on your specific requirements. You'll often define clear boundaries between them based on the goals of your system.
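One way to picture that boundary is a single event feed that serves two paths: a streaming path that reacts per event, and a batch path that stores everything for later training. This is only a toy sketch; `ingest`, `train_on_batch`, and the threshold are hypothetical, and the "training" step is just a mean.

```python
from statistics import mean
from typing import Callable, Dict, Iterable, List


def ingest(
    events: Iterable[Dict],
    on_anomaly: Callable[[Dict], None],
    threshold: float = 100.0,
) -> List[Dict]:
    """Feed every event to both paths and return the stored batch data."""
    training_store: List[Dict] = []
    for event in events:
        # Streaming path: react immediately to suspicious values.
        if event["value"] > threshold:
            on_anomaly(event)
        # Batch path: keep the event for a later, scheduled training job.
        training_store.append(event)
    return training_store


def train_on_batch(rows: List[Dict]) -> float:
    """Placeholder for model training: computes the mean value."""
    return mean(row["value"] for row in rows)


# Usage: anomalies are reported per event, training runs on the full batch.
anomalies: List[Dict] = []
events = [{"value": v} for v in (10.0, 12.0, 250.0, 8.0)]
store = ingest(events, on_anomaly=anomalies.append)
baseline = train_on_batch(store)
```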

What to Know Before Transitioning to Streaming

As streaming tools become more widespread, streaming ingestion has become easier and more popular. However, it’s important to have a clear business case before choosing a streaming approach. Joe advises adopting streaming only after carefully considering the trade-offs compared to batch processing.

Key questions to ask:

Cost: Will streaming cost more in terms of time, money, maintenance, and downtime than batch processing?💵

Value: What advantages will real-time data provide over batch data?

Impact: How will choosing streaming over batch (or vice versa) affect the rest of your data pipeline?🫠

Example: Using Apache Spark to Support Both Strategies

A tool like Apache Spark is one way to blend batch and streaming approaches. Its flexibility allows you to implement the most suitable ingestion strategy for your needs:

Batch Processing with Spark:

Spark's core API efficiently processes large datasets in jobs that run on a schedule.

Ideal for data transformations or analytics that don't require immediate data.
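As a rough sketch of what such a batch job might look like in PySpark: the function name, the paths, and the `event_type` column are assumptions made for this example, and the job would be triggered by whatever scheduler you already use.

```python
def run_daily_batch(input_path: str, output_path: str) -> None:
    """Read one day's JSON files, aggregate them, and write Parquet."""
    # Imported lazily so this module loads even where PySpark is not installed.
    from pyspark.sql import SparkSession, functions as F

    spark = SparkSession.builder.appName("daily-batch-ingest").getOrCreate()

    # Batch read: everything found at input_path is loaded in one pass.
    events = spark.read.json(input_path)

    # Assumes each record has an "event_type" field (hypothetical schema).
    daily_counts = events.groupBy("event_type").agg(
        F.count("*").alias("event_count")
    )

    # Replace the previous output for this run.
    daily_counts.write.mode("overwrite").parquet(output_path)
    spark.stop()
```

A call such as `run_daily_batch("/data/raw/2024-01-01/", "/data/curated/2024-01-01/")` would then run once per day; both paths are placeholders.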

Stream Processing with Spark:

Spark Structured Streaming processes data continuously.

Enables immediate insights from live data sources like Kafka.

Suitable for real-time applications that need instant responses.
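The streaming side might look like the sketch below, which reads a Kafka topic with Structured Streaming and prints messages to the console. The function name, broker address, and topic are placeholders, and it assumes the Spark Kafka connector package is available to your Spark installation.

```python
def start_kafka_stream(bootstrap_servers: str, topic: str):
    """Start a streaming query that prints Kafka messages as they arrive."""
    # Imported lazily so this module loads even where PySpark is not installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("kafka-stream-ingest").getOrCreate()

    # Streaming read: rows keep arriving for as long as the query runs.
    raw = (
        spark.readStream
        .format("kafka")
        .option("kafka.bootstrap.servers", bootstrap_servers)
        .option("subscribe", topic)
        .load()
    )

    # Kafka delivers the payload as bytes; cast it to a string column.
    messages = raw.selectExpr("CAST(value AS STRING) AS message")

    # The console sink is for demonstration only, not for production use.
    return (
        messages.writeStream
        .format("console")
        .outputMode("append")
        .start()
    )
```

You would typically call `query = start_kafka_stream("localhost:9092", "events")` followed by `query.awaitTermination()` to keep the stream running.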

By using a tool that supports both strategies, you can adapt your data pipeline to meet varying requirements without switching between different platforms.

We recently published a beginner-friendly Spark tutorial. Check it out if you're interested!

Getting Started with Apache Spark: Unlocking Big Data Processing

Apache Spark is a fast and general-purpose cluster-computing system that has become a cornerstone in the big data ecosystem.

If you'd like to learn more about data engineering, check out the Data Engineering Professional course on Coursera/DeepLearning.AI.

Join The Conversation!

We hope you found this post helpful. If you have any questions, comments, or experiences you'd like to share, we'd love to hear from you!

I have a question, though it's outside the topic of batch vs. streaming.

At what point should a company start implementing an incremental load?