Docker for Data Engineers

From introduction to implementation, learn how to build reliable, reproducible data pipelines using Docker

As data engineers, we might find ourselves in different scenarios. Maybe we're part of a large team where others handle infrastructure, or perhaps we're the only data engineer managing the entire data platform. In either case, it's crucial to understand the tools and dependencies that support our development workflows.

When we're developing data pipelines, whether with tools like Airflow[1], dbt[2], or custom scripts, our code often depends on a specific set of configuration files, library versions, and databases. Packaging all these pieces together and ensuring they behave the same way across different environments can be challenging.

We’ve likely run into problems like:

Confusing setup instructions that break on a colleague’s laptop.

Difficulty replicating production bugs locally.

This is where Docker becomes invaluable.

Docker helps us create reproducible, isolated environments. For data engineers, this means we can:

Run the same code with the same results across development, testing, and production.

Eliminate dependency conflicts.

Easily share and onboard team members with a standard setup.

Spin up tools like Airflow, Postgres, and dbt with minimal effort.

In this post, we’ll explore:

Virtual Machines vs Containers: Understanding the Key Differences

What is Docker?

Docker Components: Dockerfiles and images, containers, registries, Docker Compose, and volumes.

Docker Workflow: How everything fits together in practice.

Introduction to Kubernetes

Hands-On Project: Build a simple data pipeline using dbt and PostgreSQL inside Docker, with a GitHub repo[3].

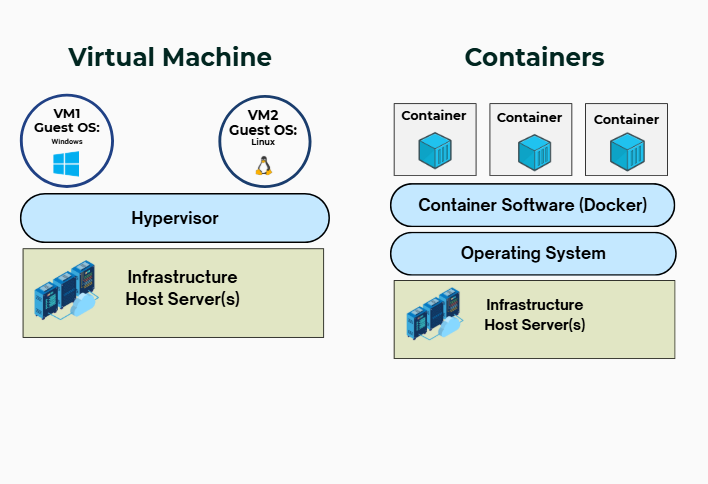

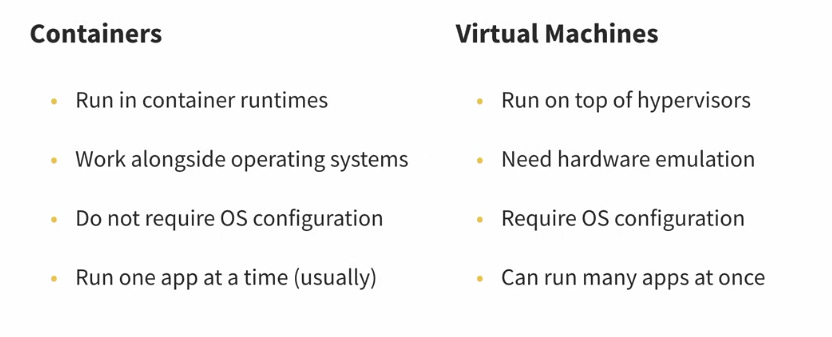

Virtual Machines vs Containers

Containers and virtual machines both help run data applications in isolated environments, but they work very differently. Virtual machines (VMs) emulate entire computers using a hypervisor[4] (also called a virtual machine monitor) that simulates hardware like CPUs, memory, and disks. Each VM includes its own full operating system, which makes VMs heavier and slower to start. This setup offers strong isolation and flexibility, allowing multiple data apps to run inside one VM, but it also consumes more resources and disk space.

Containers, on the other hand, share the host system’s operating system kernel and run on a container runtime. They don’t need to boot a full OS, so they start very quickly and use much less disk space. Containers are lightweight and usually designed to run a single app each, which makes them efficient for deploying many apps at once.

Docker

Before Docker, there were other ways to isolate applications, but they were harder to use. It started with chroot in the late 1970s and early 1980s, which let a program treat one directory as the root of the filesystem, so it could only see that part of the system. Later, systems like BSD Jails[5], Solaris Zones[6], and Linux Containers (LXC)[7] gave more control: they could isolate not just files, but also processes and networks. However, setting them up was complex and not very developer-friendly.

Docker made things much easier. It lets us package everything our data app needs into an image and run it as a container anywhere with just a few simple commands. It also makes sharing images easy through registries like Docker Hub.

"Docker is like cooking our favourite family recipe."

At home, it tastes perfect. But at a friend’s place it turns out different: maybe the salt, stove, or pans change the outcome.

Docker solves this for software. It packages everything our app needs (code, tools, and settings) so it runs the same way anywhere. It’s like bringing our own kitchen wherever we go.

Docker Components

As data engineers, we're often building pipelines, managing databases, and orchestrating tools. Understanding Docker’s core components gives us the power to build portable, reproducible, and scalable environments, which are essential for real-world data projects.

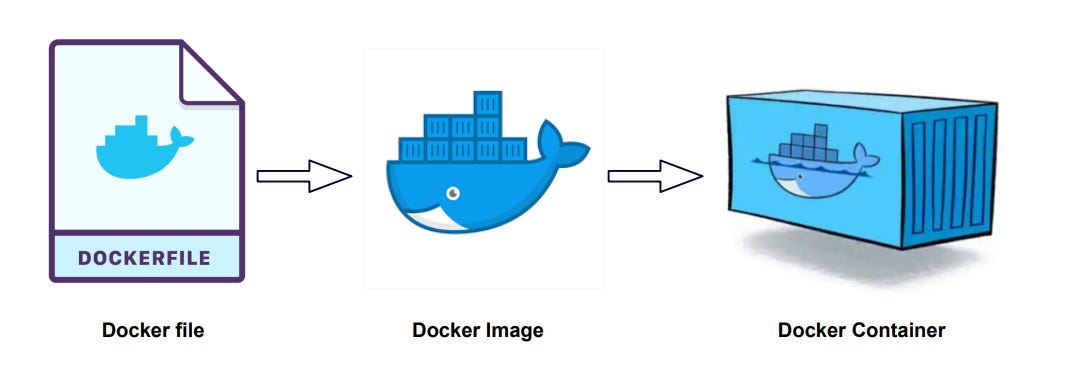

a. Image

A Docker image is like a recipe or blueprint for running a data application. It defines everything needed to set up and run our data app, from the operating system and software packages to configuration files and commands. We can build our own image or use an existing one, then add custom layers, such as installing dbt, setting up environment variables, or including specific Python packages, to create a fully reproducible and portable setup.

Images are created using a Dockerfile, which lists all the steps and tools required to build the environment. Think of it as a list of ingredients and instructions: technologies our data app needs, the runtime it depends on, and how to launch it. Once built, the image can be shared or reused anywhere, making it easy to reproduce the same setup across different machines or environments.
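Because an image is built one Dockerfile instruction at a time, Docker can reuse cached results from a previous build. A step is reused when the step before it and the instruction itself are unchanged, and for COPY Docker also compares the copied files' contents. The Python below is a simplified model of that rule, not Docker's implementation; the hashing scheme and the function names are assumptions for illustration. It uses the four instructions of the course Dockerfile broken down below to show why frequently edited files, such as our own script, usually go near the end.

```python
import hashlib


def layer_keys(instructions, file_contents):
    """Return a simplified cache key for each Dockerfile instruction.

    Assumption: a step's key depends only on the previous step's key, the
    instruction text and, for COPY, the bytes of the copied file. Docker's
    real cache checks are more detailed; this models the reuse rule only.
    """
    keys = []
    parent = ""
    for instruction in instructions:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(instruction.encode())
        if instruction.startswith("COPY "):
            source = instruction.split()[1]
            h.update(file_contents[source])  # COPY also depends on file contents
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def reused_steps(old_keys, new_keys):
    """Count leading steps whose keys match, i.e. steps a rebuild could take from cache."""
    count = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        count += 1
    return count


dockerfile = [
    "FROM python:3.8-slim",
    "RUN apt-get update && apt-get install -y postgresql-client-15",
    "COPY elt_script.py .",
    'CMD ["python", "elt_script.py"]',
]

first_build = layer_keys(dockerfile, {"elt_script.py": b"print('v1')"})
second_build = layer_keys(dockerfile, {"elt_script.py": b"print('v2')"})
print(reused_steps(first_build, second_build))  # 2: FROM and RUN come from cache
```

Editing elt_script.py invalidates only the COPY step and everything after it, so the slow apt-get install step is reused. If the COPY came before the RUN, every script edit would reinstall the PostgreSQL client.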

This Dockerfile from the FreeCodeCamp Data Engineering Course creates a lightweight Docker image designed to run a Python ELT script that interacts with a PostgreSQL database.

Here's a breakdown of what each line does:

FROM python:3.8-slim

Uses a minimal Python 3.8 base image.

The "slim" tag means a smaller image with fewer preinstalled packages, so it is quicker to pull and takes less disk space.

RUN apt-get update && apt-get install -y postgresql-client-15

Installs the PostgreSQL client tools (version 15) so the Python script can connect to and run commands on a Postgres database.

psql and other CLI tools become available inside the container.

COPY elt_script.py .

Copies our local elt_script.py file into the container's working directory.

CMD ["python", "elt_script.py"]

Sets the default command to run the ELT script when the container starts.
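The course's elt_script.py itself isn't reproduced in this post. As a rough, hypothetical sketch of what such a script can do with the postgresql-client tools installed above, the Python below dumps the source database with pg_dump and replays the dump into the destination with psql. The host names follow the source_postgres and destination_postgres services described in the Docker Compose section; the database names, user names, and passwords are assumptions, not the course's code.

```python
import os
import subprocess

# Hypothetical connection settings. Host names match the Compose services;
# the database names, users and passwords are illustrative assumptions
# (in practice, inject credentials through environment variables).
SOURCE = {"host": "source_postgres", "port": "5432", "user": "postgres",
          "dbname": "source_db", "password": "secret"}
DESTINATION = {"host": "destination_postgres", "port": "5432", "user": "postgres",
               "dbname": "destination_db", "password": "secret"}
DUMP_FILE = "data_dump.sql"


def dump_command(db, out_file):
    """Build a pg_dump command that writes the database to a plain SQL file."""
    return ["pg_dump", "-h", db["host"], "-p", db["port"], "-U", db["user"],
            "-d", db["dbname"], "-f", out_file, "-w"]  # -w: never prompt for a password


def load_command(db, in_file):
    """Build a psql command that replays the SQL file into the database."""
    return ["psql", "-h", db["host"], "-p", db["port"], "-U", db["user"],
            "-d", db["dbname"], "-a", "-f", in_file]  # -a: echo statements as they run


def run(cmd, db):
    """Run a client tool, passing the password via PGPASSWORD (read by libpq tools)."""
    env = dict(os.environ, PGPASSWORD=db["password"])
    subprocess.run(cmd, env=env, check=True)


def main():
    """Copy the source database into the destination database."""
    run(dump_command(SOURCE, DUMP_FILE), SOURCE)
    run(load_command(DESTINATION, DUMP_FILE), DESTINATION)
```

To use it as the container's entry point, add the usual if __name__ == "__main__": main() guard at the bottom so that CMD ["python", "elt_script.py"] runs it. Inside the Compose network, the databases are reached on PostgreSQL's default port 5432, not on the ports published to the host. A real script would also wait until both databases accept connections, for example by polling pg_isready.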

b. Container

A container is like a ready-to-serve dish made from the recipe (image).

It’s a running version of an image.

We can start, stop, delete, or run multiple containers at once.

Each container runs in its own isolated environment, so by default it doesn’t affect our computer or other containers.
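To make the start, stop, and delete lifecycle concrete, here is a small Python model of it. The state names follow the values docker inspect reports in a container's State.Status (created, running, exited), plus a removed marker for a deleted container; paused, restarting, and the other states are left out. It is an illustration, not Docker's code.

```python
# Simplified container lifecycle model (an illustration, not Docker's code).
TRANSITIONS = {
    ("created", "start"): "running",   # docker start
    ("running", "stop"): "exited",     # docker stop
    ("exited", "start"): "running",    # a stopped container can be started again
    ("created", "rm"): "removed",      # docker rm
    ("exited", "rm"): "removed",
    # ("running", "rm") is deliberately missing: docker rm refuses to remove
    # a running container unless it is forced with -f.
}


class Container:
    def __init__(self, image, name):
        self.image = image
        self.name = name
        self.state = "created"

    def apply(self, action):
        """Move to the next state, or raise if the action is not allowed."""
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {action} {self.name}: it is {self.state}")
        self.state = TRANSITIONS[key]
        return self.state


# Two independent containers created from the same image.
db_a = Container("postgres:15", "db_a")
db_b = Container("postgres:15", "db_b")
db_a.apply("start")
db_b.apply("start")
db_a.apply("stop")
print(db_a.state, db_b.state)  # exited running
```

Stopping db_a has no effect on db_b, even though both come from the same image: each container has its own state.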

c. Registry

A Docker registry is a centralised place where Docker images are stored and managed. The most widely used registry is Docker Hub, which acts like a marketplace for container images. It allows users to upload (push) their own images and download (pull) others, including official and community-contributed ones. Public images are freely accessible, while private repositories offer more control. When we build a Docker image, we can assign it a name and tag, then push it to Docker Hub for reuse or sharing.
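When we give an image a name and tag, Docker fills in defaults for anything we leave out. If there is no registry host, Docker Hub (docker.io) is used. A single-word name such as python means an official image under the library/ namespace. If there is no tag, latest is used. The function below is a simplified sketch of those normalisation rules (digest references like @sha256:... are not handled), and your-username is a placeholder account.

```python
def parse_image_reference(ref):
    """Split an image reference into (registry, repository, tag).

    A simplified sketch of Docker's normalisation rules; digests are not handled.
    """
    registry = "docker.io"
    remainder = ref
    first, sep, rest = ref.partition("/")
    # The first component is a registry host if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest

    # The tag is whatever follows the last ':' in the final path component.
    path, _, last = remainder.rpartition("/")
    name, colon, tag = last.partition(":")
    if not colon:
        tag = "latest"
    repository = f"{path}/{name}" if path else name

    # Official Docker Hub images live under the implicit "library/" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = f"library/{repository}"
    return registry, repository, tag


for ref in ["python:3.8-slim", "postgres", "your-username/dbt-demo:0.1",
            "localhost:5000/team/etl"]:
    print(ref, "->", parse_image_reference(ref))
```

This is why docker pull postgres and docker pull docker.io/library/postgres:latest fetch the same image.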

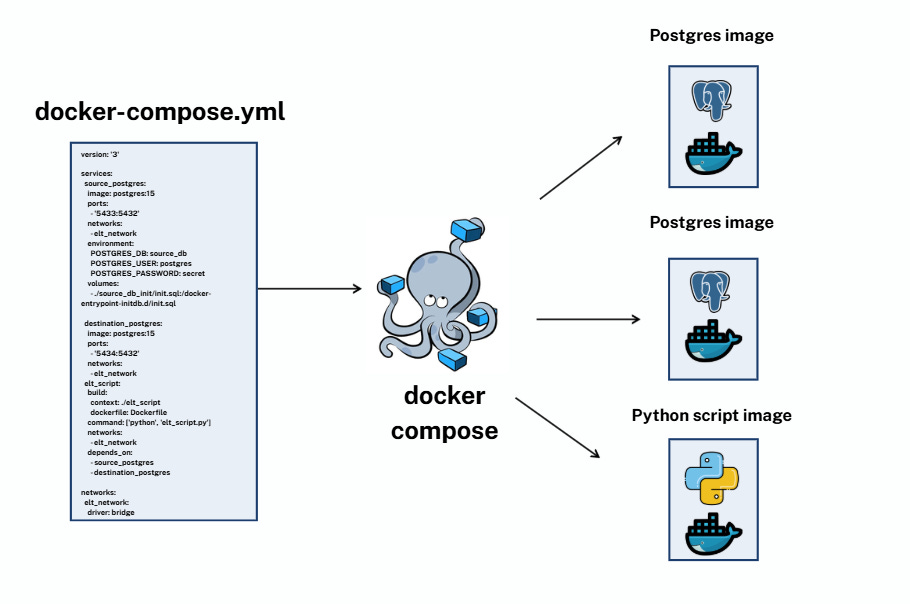

d. Docker Compose

As data engineers, we often work with complex workflows that involve multiple components: a PostgreSQL database, an ETL/ELT pipeline, a REST API, and maybe dbt for transformations. Managing all of these in a single container would be messy and inflexible. Instead, we break them into microservices: each task or service runs in its own isolated container, making it easier to develop, monitor, and debug each piece independently.

Docker Compose lets us define and manage this entire setup in one place. Using a docker-compose.yml file, we can declare all the services our pipeline needs, including dependencies, ports, volumes, and networking, and bring them up together with a single command. This ensures that our database, ETL scripts, and supporting tools all run consistently in development, testing, or production, and talk to each other seamlessly, just like they would in a real data platform.
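One detail that often trips people up in Compose setups is which host name and port to use. A published port such as "5433:5432" maps host port 5433 to port 5432 inside the container (PostgreSQL's default). Containers on the same Compose network, by contrast, reach each other by service name on the container port. The helper below is a minimal sketch of that rule. It only understands the HOST:CONTAINER short syntax, and the example service name and ports are assumptions.

```python
def parse_port_mapping(spec):
    """Parse a Compose short-syntax port like "5433:5432" into (host, container).

    Only the HOST:CONTAINER form is handled; the optional IP prefix, port
    ranges and "/udp" suffix that Compose also accepts are not.
    """
    host_part, sep, container_part = spec.rpartition(":")
    if not sep:
        raise ValueError(f"expected HOST:CONTAINER, got {spec!r}")
    return int(host_part), int(container_part)


def endpoint(service, port_spec, from_host):
    """Return the (hostname, port) a client should use to reach `service`."""
    host_port, container_port = parse_port_mapping(port_spec)
    if from_host:
        # Tools on our laptop go through the published host port.
        return "localhost", host_port
    # Other containers on the same Compose network use the service name as
    # the hostname and connect to the container port directly.
    return service, container_port


print(endpoint("source_postgres", "5433:5432", from_host=True))
print(endpoint("source_postgres", "5433:5432", from_host=False))
```

So a SQL client on our laptop would use localhost:5433, while a script running in another container would use source_postgres:5432.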

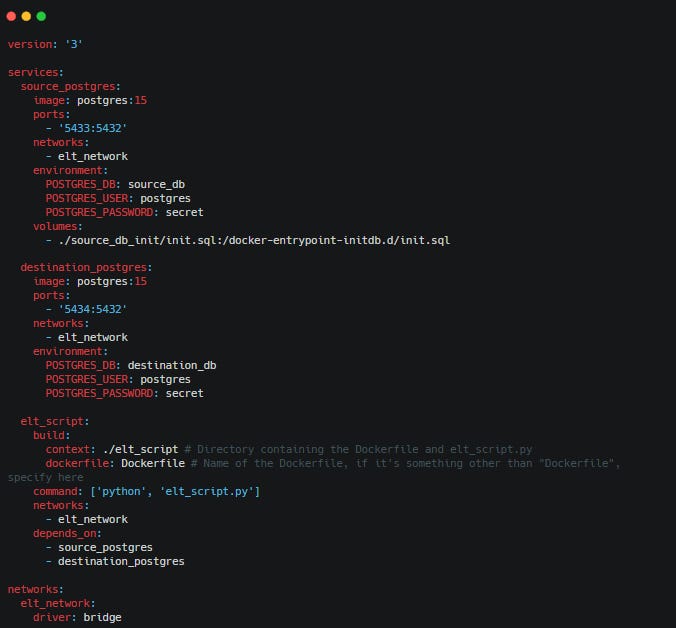

This Docker Compose file sets up a basic ELT pipeline with three services:

source_postgres: PostgreSQL database (port 5433) with initial data loaded from init.sql.

destination_postgres: Another PostgreSQL database (port 5434) to store transformed data.

elt_script: A Python script that connects to both databases to perform the ELT process.

All services run on the same Docker network and start in the right order using depends_on.
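depends_on describes a dependency graph, and Compose starts services in an order that respects it. The sketch below models that ordering with Python's graphlib (Python 3.9+) using the three services listed above. It illustrates the constraint; it is not Compose's own algorithm. Plain depends_on only waits for a dependency's container to start, not for PostgreSQL inside it to accept connections. Compose's long syntax, with condition: service_healthy plus a healthcheck, covers that case.

```python
from graphlib import TopologicalSorter

# Each service maps to the services it depends on (its depends_on list).
services = {
    "source_postgres": [],
    "destination_postgres": [],
    "elt_script": ["source_postgres", "destination_postgres"],
}


def start_order(depends_on):
    """Return one valid start order: every service after its dependencies."""
    return list(TopologicalSorter(depends_on).static_order())


order = start_order(services)
print(order)  # both databases appear before elt_script
```

The relative order of the two databases is not fixed, because neither depends on the other. A cycle in depends_on would make TopologicalSorter raise graphlib.CycleError, which mirrors Compose rejecting circular dependencies.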

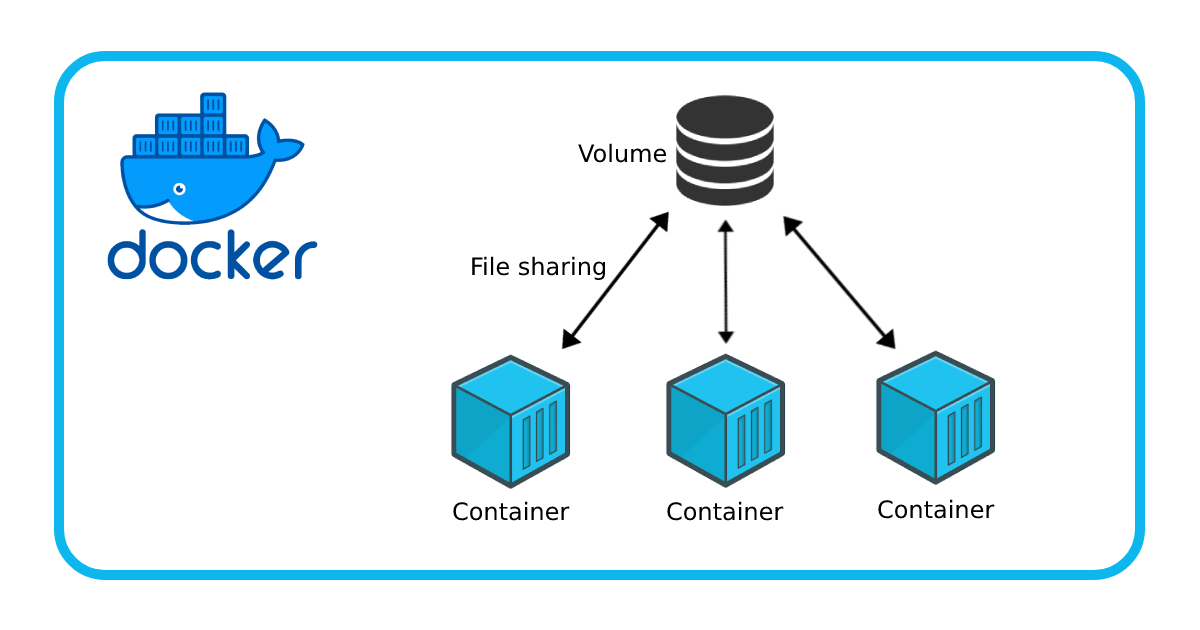

e. Volumes

By default, anything a container writes, including database files, is stored in the container’s own writable layer, so it is lost when the container is removed (for example, when we recreate it from a newer image). This isn’t ideal for data engineering workflows where we need to persist data across runs, like saving database contents, raw files, or pipeline outputs. That’s where Docker volumes come in. A volume is storage that lives outside the container, usually a directory on our machine (or remote storage via a volume driver), and is mounted into the container, allowing us to store data independently of the container’s lifecycle.

For example, if we're running a PostgreSQL container, using a volume ensures that our database data isn't lost when the container is removed or recreated. Volumes also make it easy to share data between services, such as between an ETL job and a reporting tool. They help maintain state, support reproducibility, and keep our data workflows reliable, making them essential in any real-world data engineering setup.
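In a docker-compose.yml file, volumes are usually declared with the short SOURCE:TARGET[:MODE] syntax, and the source decides what kind of mount it is. A path (starting with /, . or ~) bind-mounts a host directory. A plain name refers to a named volume managed by Docker, which is declared under the top-level volumes: key. The Python helper below is a simplified sketch of that rule. The pgdata and seeds names are hypothetical, and /var/lib/postgresql/data is the data directory used by many versions of the official postgres image (check the image documentation for the version you run).

```python
def classify_volume(spec):
    """Classify a Compose short-syntax volume entry (simplified sketch).

    A source that looks like a path is a bind mount of a host directory; any
    other source is a named volume managed by Docker. Windows drive paths
    such as C:\\data are not handled.
    """
    parts = spec.split(":")
    if len(parts) == 1:
        # Only a container path: Docker creates an anonymous volume.
        return {"type": "anonymous volume", "source": None,
                "target": parts[0], "read_only": False}
    source, target = parts[0], parts[1]
    mode = parts[2] if len(parts) > 2 else "rw"
    kind = "bind mount" if source.startswith(("/", ".", "~")) else "named volume"
    return {"type": kind, "source": source, "target": target,
            "read_only": mode == "ro"}  # only the plain "ro" mode is recognised


print(classify_volume("pgdata:/var/lib/postgresql/data"))
print(classify_volume("./seeds:/data/seeds:ro"))
```

A named volume such as pgdata keeps the database files when the container is recreated. A read-only bind mount such as ./seeds is a convenient way to feed local CSV files into a container without letting it modify them.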

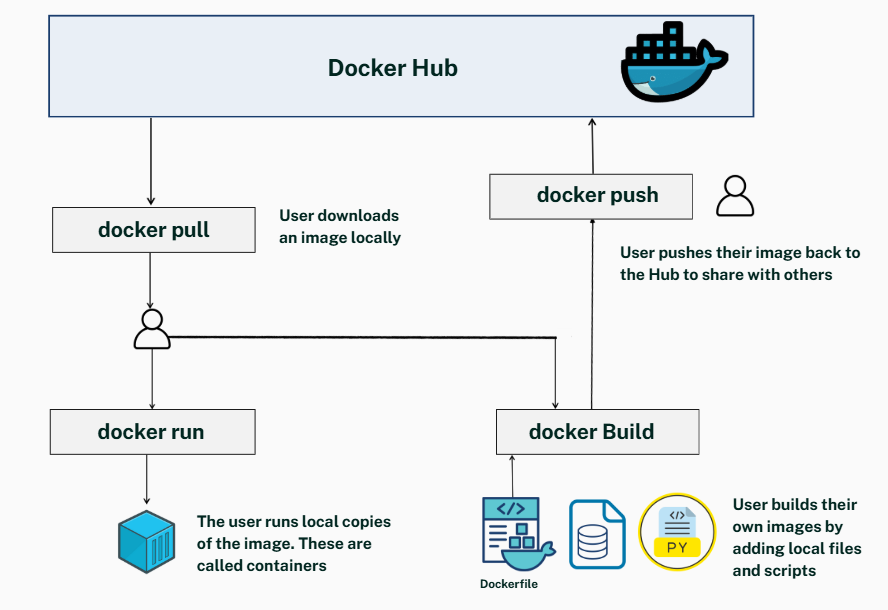

Docker workflow

The diagram below shows a typical Docker workflow, from finding images to creating our own and sharing them with others:

Search for an Image on Docker Hub

Docker Hub is where we look for pre-built images of the tools we need, such as Airflow, Postgres, or dbt, already packaged and ready to run.

Pull the Image (Download It)

Once we've found one, we use docker pull to download the image to our computer.

Run the Image (Start a Container)

Now that the image is downloaded, we can use docker run to start it.

This running instance of the image is called a container. It’s like running an app in its own little box.

Build Our Own Image

If we’re building a pipeline (for example, with dbt), we can create our own Docker image by writing a Dockerfile, a text file that tells Docker how to set up the environment using our code and files. Then we run docker build to create the custom image.

Push Our Image to Docker Hub

When our image is ready and working, we can share it with others using docker push.

This uploads our image to Docker Hub so teammates or others can use it.

Repeat and Collaborate

We can pull someone else’s image, improve or change it, and push our version back.

This makes Docker a powerful tool for collaboration and consistency in projects.
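The workflow above maps onto a handful of Docker CLI commands. The Python sketch below only assembles and prints them; set dry_run=False to actually execute them with subprocess. The names your-username, dbt-demo and the 0.1 tag are placeholders. python:3.8-slim is reused from the Dockerfile example so the docker run step has a simple command to execute. docker login is included because pushing to Docker Hub requires an authenticated session.

```python
import shlex
import subprocess


def workflow_commands(base_image, username, image, tag):
    """Docker CLI calls for the pull, run, build and push steps (placeholder names)."""
    target = f"{username}/{image}:{tag}"
    return [
        ["docker", "pull", base_image],                                # pull
        ["docker", "run", "--rm", base_image, "python", "--version"],  # run; container removed on exit
        ["docker", "build", "-t", target, "."],                        # build from ./Dockerfile
        ["docker", "login"],                                           # authenticate to Docker Hub
        ["docker", "push", target],                                    # push
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


run_all(workflow_commands("python:3.8-slim", "your-username", "dbt-demo", "0.1"))
```

The -t option names the image with our Docker Hub namespace, which is what lets docker push know where the image belongs.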

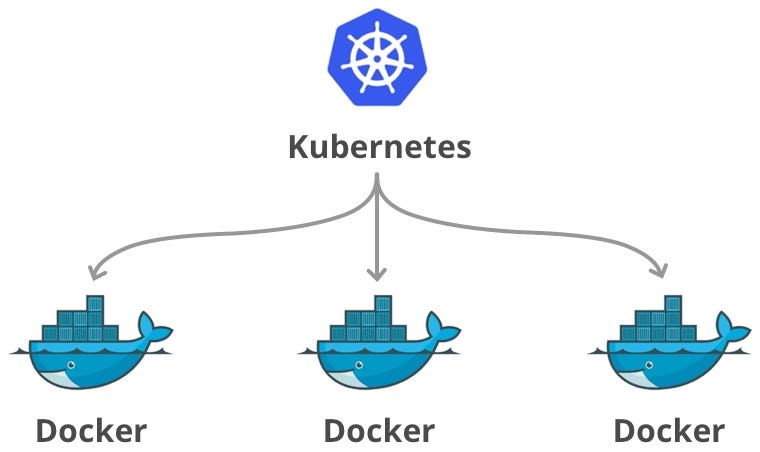

Container orchestrators

Docker helps run containers easily on one computer, but when we have hundreds or thousands of containers across many computers, it gets complicated. Docker alone can’t easily move containers between machines, scale them automatically, or handle secure networking and traffic routing well.

That’s where container orchestrators come in. They help manage, scale, and connect containers across many machines smoothly. Kubernetes is the most popular container orchestrator today. It can run containers on many machines as if they were on one, scale container groups up or down based on demand, securely route traffic, and is very flexible with lots of tools to extend it.
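As an example of scaling "based on demand", Kubernetes' Horizontal Pod Autoscaler computes desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). It skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a sketch of just that formula. The min/max bounds stand in for the HPA's minReplicas and maxReplicas settings, and their default values here are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     tolerance=0.1, min_replicas=1, max_replicas=10):
    """Replica count suggested by the Horizontal Pod Autoscaler's scaling rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas averaging 90% CPU against a 50% target -> ceil(3 * 1.8) = 6
print(desired_replicas(3, current_metric=90, target_metric=50))
```

Rounding up with ceil errs on the side of more capacity, and the tolerance band stops the autoscaler from flapping when the metric hovers around its target.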

In short, if Docker is like packing meals in boxes, Kubernetes is like the delivery service that manages and delivers thousands of those meal boxes efficiently worldwide.

Data Transformation Pipeline: dbt + PostgreSQL + Docker

This project is a data transformation pipeline using dbt and PostgreSQL, packaged with Docker and Docker Compose.

Pipeline Overview:

Transforms raw customer and order CSV files into clean, analytics-ready tables.

Uses dbt to:

Stage and clean raw data (e.g., rename columns, validate emails).

Build final dimensional models (e.g., categorise cities, calculate age).

Follows the pattern: raw → staging → final models.
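In the project these steps are dbt SQL models. Purely to illustrate what the staging and final layers do, the Python below applies the same kind of logic to a single row. The raw column names, the email check, and the set of "major" cities are hypothetical, not taken from the repository.

```python
import re
from datetime import date

# Loose sanity check for emails (not full RFC 5322 validation).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MAJOR_CITIES = {"London", "New York", "Sydney"}  # hypothetical category rule


def stage_customer(raw):
    """Staging: rename raw columns, clean values and flag invalid emails."""
    email = raw["EMAIL_ADDR"].strip().lower()
    return {
        "customer_id": int(raw["CUST_ID"]),
        "email": email,
        "is_valid_email": bool(EMAIL_PATTERN.match(email)),
        "city": raw["CITY"].strip().title(),
        "birth_date": date.fromisoformat(raw["DOB"]),
    }


def age_on(birth_date, as_of):
    """Whole years between birth_date and as_of."""
    had_birthday = (as_of.month, as_of.day) >= (birth_date.month, birth_date.day)
    return as_of.year - birth_date.year - (0 if had_birthday else 1)


def final_customer(staged, as_of):
    """Final model: add derived columns on top of the staged row."""
    return {
        **staged,
        "age": age_on(staged["birth_date"], as_of),
        "city_category": "major" if staged["city"] in MAJOR_CITIES else "other",
    }


raw_row = {"CUST_ID": "7", "EMAIL_ADDR": " Ada@Example.com ", "CITY": "london", "DOB": "1990-06-15"}
customer = final_customer(stage_customer(raw_row), as_of=date(2024, 6, 14))
print(customer["age"], customer["city_category"], customer["is_valid_email"])  # 33 major True
```

Keeping cleaning in staging and business rules in the final layer means each derived column is computed from already-cleaned data. The raw → staging → final split expresses the same idea.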

Why Docker:

We build a custom dbt image with the required packages and configs.

We use the official PostgreSQL image from Docker Hub.

Docker Compose runs both together, handling setup and networking.

This makes the pipeline easy to run anywhere with no local setup.

Prerequisites

Make sure Docker and Docker Compose are installed on your machine. You can find installation instructions in the official Docker documentation[8].

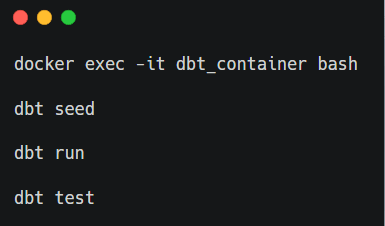

How to Run

Start the services:

docker-compose up --build

(With Docker Compose V2, the same command is written docker compose up --build.)

Load data and run transformations:

Check the database:

docker exec -it dbt_postgres psql -U dbt_user -d dbt_demo

That's it! The pipeline will load your CSV data, transform it through staging and marts models, and you can query the results in PostgreSQL.
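The exact commands for loading data and running the transformations are in the repository. The sketch below assumes two things: that the project follows the usual dbt flow (dbt seed loads CSV files, dbt run builds the models, dbt test checks them), and that dbt runs in a Compose service named dbt. That service name is hypothetical, so check the repo's docker-compose.yml. Under those assumptions, the steps could be scripted as below. The -T flag disables TTY allocation so docker compose exec works when called from a script.

```python
import shlex
import subprocess

# Hypothetical: the Compose service that has dbt installed is called "dbt".
DBT_SERVICE = "dbt"
DBT_STEPS = [
    ["dbt", "debug"],  # check the project, profiles.yml and the Postgres connection
    ["dbt", "seed"],   # load CSV files (from the seeds/ folder by default) as tables
    ["dbt", "run"],    # build the staging models, then the final models
    ["dbt", "test"],   # run the data tests defined for the models
]


def compose_exec(service, command):
    """Wrap a command so it runs inside a running Compose service container."""
    return ["docker", "compose", "exec", "-T", service, *command]


def run_pipeline(dry_run=True):
    """Print each step; execute it only when dry_run is False."""
    for step in DBT_STEPS:
        cmd = compose_exec(DBT_SERVICE, step)
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


run_pipeline()
```

Because dbt run builds models in dependency order, the staging models are materialised before the final models that select from them.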

Conclusion

Whether we're working alone or in a team, Docker helps us create data pipelines that are consistent, portable, and easier to manage. It solves common problems like environment setup, dependency conflicts, and onboarding challenges. By containerising data engineering tools, we can spend less time troubleshooting and more time focusing on delivering value.

From learning the basics to building our first data transformation pipeline, we now have a solid foundation to use Docker effectively in our data engineering work. Start experimenting, and soon Docker will become an essential part of your toolkit.

Keen to learn more about Docker essentials for data engineers? Check out this post[9].

Preparing for data engineering interviews? Check out our data engineering preparation guide.

We Value Your Feedback

If you have any feedback, suggestions, or additional topics you’d like us to cover, please share them with us. We’d love to hear from you!

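References

[1] Apache Airflow: https://airflow.apache.org/

[2] What is dbt?: https://www.getdbt.com/product/what-is-dbt

[3] dbt-postgres-docker repository: https://github.com/pipelinetoinsights/dbt-postgres-docker.git

[4] VMware, Hypervisor: https://www.vmware.com/topics/hypervisor

[5] FreeBSD Handbook, Jails: https://docs.freebsd.org/en/books/handbook/jails/

[6] Oracle Solaris Containers, Introduction to Zones: https://docs.oracle.com/cd/E19044-01/sol.containers/817-1592/zones.intro-1/index.html

[7] Linux Containers (LXC): https://linuxcontainers.org/

[8] Docker Docs, Install Docker Compose: https://docs.docker.com/compose/install/

[9] Data Gibberish, Docker essentials: https://www.datagibberish.com/p/docker-essentials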
