Infrastructure as Code for Data Engineers

What Data Engineers need to know about Infrastructure as Code (IaC), with a simple hands-on Terraform example

As Data Engineers, we might use cloud services to store data, move data around, run virtual machines, and build other important tools.

It’s tempting to create an account, jump straight into a cloud provider’s UI, and start building. That works well, and quickly, for personal exploration or small one-off projects. But what happens when we're building for a company, especially in a fast-moving environment?

In the real world, things get more complex:

Our infrastructure needs grow.

We have to make changes as our team scales.

We might need to recreate environments for dev, test, and prod.

And doing it all manually?

We find ourselves:

Clicking around in the UI to make changes.

Trying to keep everyone updated on what we’ve done.

Having no version control over infrastructure changes.

Onboarding new teammates who struggle to understand what resources exist and how they’re configured.

Suddenly, with dozens of environments, hundreds of resources, and multiple teams, it becomes unmanageable and error-prone.

That’s where Infrastructure as Code can be beneficial.

Infrastructure as Code treats our infrastructure just like software.

It brings software engineering practices, like version control, automation, and code reuse, into the world of cloud infrastructure.

With IaC, our infrastructure becomes:

Programmable: Define it in code.

Version-controlled: Track every change.

Repeatable: Reuse and redeploy environments consistently.

Instead of manually creating resources, we can use deployment tools like:

Terraform.

AWS CloudFormation.

Google Cloud Deployment Manager, and more.

These tools help us maintain a clean, well-documented, and scalable infrastructure.

In this post, we cover:

What is Infrastructure as Code (IaC)?

Five main types of IaC tools.

Why Should Data Engineers Use IaC?

What is Terraform?

A simple implementation of Terraform on Azure.

What Is Infrastructure as Code?

In the early days of IT, setting up a server was a manual process.

Engineers had to:

Unbox and plug in physical servers.

Log in to each one individually via the command line.

Manually install and configure the required software.

This approach worked fine for one or two servers, but quickly became slow, error-prone, and impossible to scale when organisations needed dozens or hundreds of machines.

From the 1990s onwards, tools like CFEngine, and later Puppet, Chef, SaltStack, and Ansible, emerged. They allowed engineers to write code describing how a server should be configured, automating repetitive tasks and ensuring consistency across environments.

Then came the cloud era. With AWS, Azure, and Google Cloud replacing on-premises hardware, the complexity shifted from racking physical servers to managing a huge variety of virtual infrastructure: virtual machines, storage, networks, containers, security rules, and more.

Around 2009, alongside the rise of DevOps, the term Infrastructure as Code became popular. IaC meant using code, not manual clicks in a UI, to define and manage infrastructure. This approach brought the same benefits that coding brought to application development: speed, repeatability, version control, and reduced human error.

For example, we could simply write:

“Create a server with 8 GB of RAM and install a database.”

Today, IaC is a cornerstone of modern infrastructure management, enabling teams to deploy entire environments in minutes, maintain consistency across regions, and roll back changes with confidence.

By adopting IaC, we can significantly improve deployment speed, reduce the risk of human error, and ensure that infrastructure resources are reproducible and maintainable over time. As the complexity of systems grows, IaC provides the tools necessary to manage these environments efficiently.

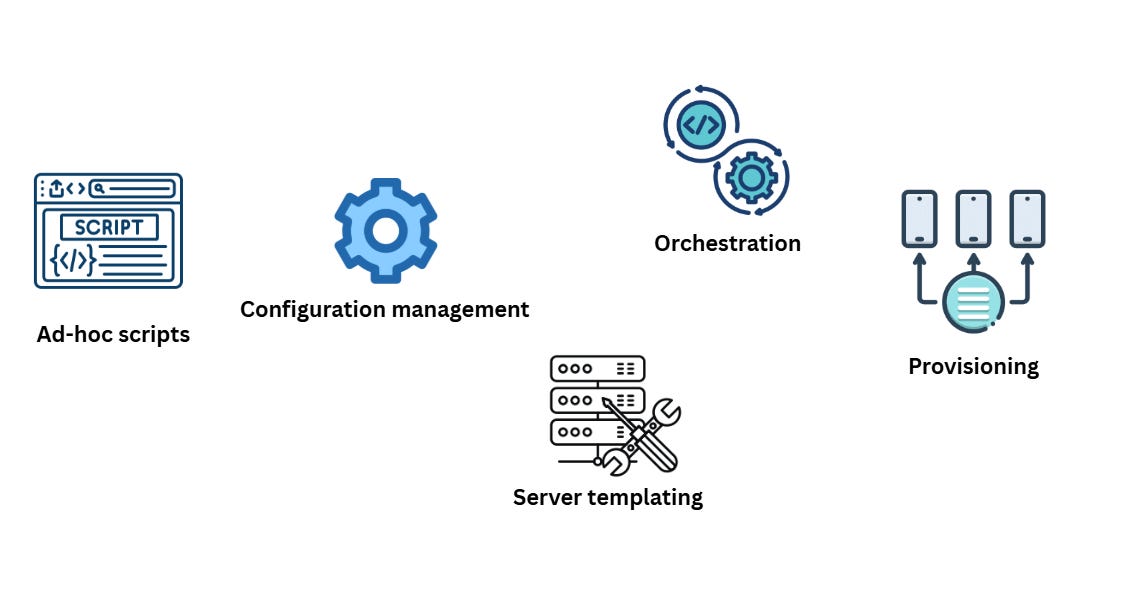

Five Main Types of IaC Tools

In the book Terraform: Up and Running: Writing Infrastructure as Code[1], Yevgeniy Brikman[2] outlines five main types of IaC tools. As data engineers, we don’t need to master all of them, but it’s useful to understand what each type does:

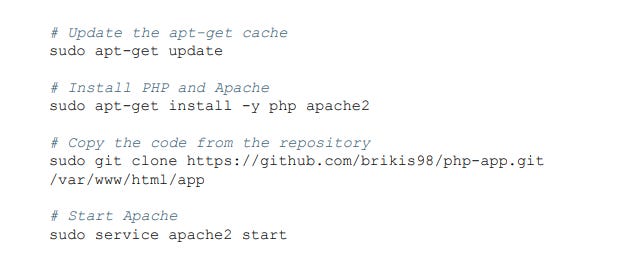

1. Ad-hoc scripts

This is the simplest way to automate tasks: write a script in a language like Bash[3] or Python to do what we’d normally do by hand.

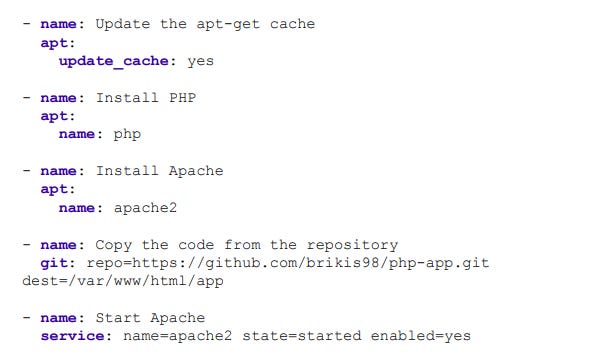
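To make this concrete, here is a minimal ad-hoc script, written in Python rather than Bash and using a temporary local folder as a hypothetical stand-in for real infrastructure. It creates a bronze/silver/gold layout one step at a time, exactly as we would by hand. Note that it is not idempotent: running it a second time fails because the folders already exist, which is one of the main drawbacks of ad-hoc scripts.

```python
import os
import tempfile


def setup_lake(root: str) -> list[str]:
    """Ad-hoc setup: create one folder per medallion layer, step by step."""
    created = []
    for layer in ["bronze", "silver", "gold"]:
        path = os.path.join(root, layer)
        # os.mkdir raises FileExistsError if the folder already exists,
        # so a second run of this script fails on the very first step.
        os.mkdir(path)
        created.append(path)
    return created


if __name__ == "__main__":
    root = tempfile.mkdtemp()  # hypothetical target location
    print(setup_lake(root))
    try:
        setup_lake(root)  # re-running the same script
    except FileExistsError as err:
        print("Second run failed:", err)
```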

2. Configuration management tools

Tools like Chef, Puppet, and Ansible are designed to install and manage software on existing servers. Instead of writing custom scripts, we define what each server should look like.
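The key difference from an ad-hoc script is that we describe the desired state and the tool only acts where reality differs from it, so running it twice is safe (idempotent). A minimal Python sketch of that idea, assuming a hypothetical `ensure_packages` function and an in-memory set standing in for a server's installed packages (real tools like Ansible do this through their own modules and YAML playbooks, not this code):

```python
def ensure_packages(installed: set[str], desired: list[str]) -> list[str]:
    """Bring a (simulated) server in line with the desired package list.

    Returns the actions taken; packages already present are left alone.
    """
    actions = []
    for pkg in desired:
        if pkg not in installed:
            installed.add(pkg)  # stand-in for actually installing the package
            actions.append(f"installed {pkg}")
    return actions


server = {"python3"}  # current state of a simulated server
desired = ["python3", "nginx", "postgresql"]

print(ensure_packages(server, desired))  # ['installed nginx', 'installed postgresql']
print(ensure_packages(server, desired))  # [] (already in the desired state)
```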

Here’s an example using Ansible to set up a web server:

3. Server templating tools

Server templating tools like Docker[4] and Vagrant let us create a reusable image of a server. This image includes the OS, software, files, and settings, like a snapshot of a fully set-up machine. Instead of setting up each server from scratch, we create one image and use other tools to deploy it to many servers.

If you're keen to learn more about Docker, check out our popular post here:

4. Orchestration tools

Server templating tools like Docker help create containers and VMs, but what happens when we need to run them at scale?

In larger, more complex systems where many containers are running, we often need to:

Deploy the containers efficiently.

Monitor health and replace failed containers (auto-healing).

Scale up or down based on load (auto-scaling).

Balance traffic between containers (load balancing).

Let containers talk to each other (service discovery).

That’s where orchestration tools come in, such as Kubernetes[5], Docker Swarm[6], and Amazon ECS[7].

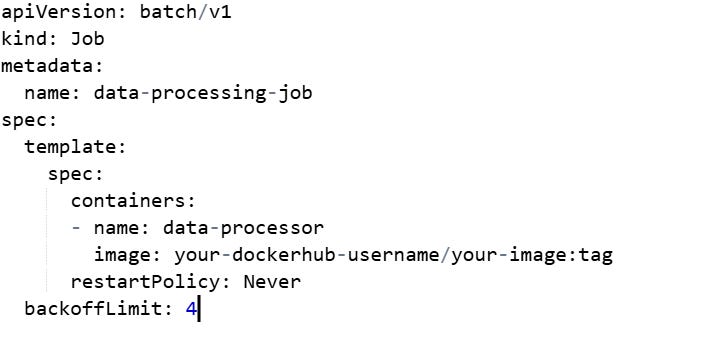
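Under the hood, orchestrators keep comparing the desired number of healthy replicas with what is actually running, then correct the difference. The toy Python loop below sketches that reconciliation idea for the auto-healing and auto-scaling needs listed above; the `reconcile` function and the `worker-N` names are hypothetical, and real orchestrators such as Kubernetes are far more involved.

```python
def _new_name(containers: dict[str, str]) -> str:
    """Pick the lowest unused 'worker-N' name."""
    n = 1
    while f"worker-{n}" in containers:
        n += 1
    return f"worker-{n}"


def reconcile(containers: dict[str, str], desired_replicas: int) -> list[str]:
    """One reconciliation pass over a simulated cluster.

    `containers` maps container name -> status ("running" or "failed").
    The dict is updated in place; the returned list describes what changed.
    """
    events = []
    # Auto-healing, part 1: drop failed containers so they can be replaced.
    for name, status in list(containers.items()):
        if status == "failed":
            del containers[name]
            events.append(f"removed {name}")
    # Auto-scaling down: stop replicas beyond the desired count.
    for name in sorted(containers)[desired_replicas:]:
        del containers[name]
        events.append(f"stopped {name}")
    # Auto-healing, part 2, and auto-scaling up: start replicas until we hit the target.
    while len(containers) < desired_replicas:
        name = _new_name(containers)
        containers[name] = "running"
        events.append(f"started {name}")
    return events


cluster = {"worker-1": "running", "worker-2": "failed"}
print(reconcile(cluster, desired_replicas=3))
# ['removed worker-2', 'started worker-2', 'started worker-3']
print(reconcile(cluster, desired_replicas=1))
# ['stopped worker-2', 'stopped worker-3']
```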

Below is a Kubernetes YAML that defines a Job to run a containerised task with limited retries:

5. Provisioning tools

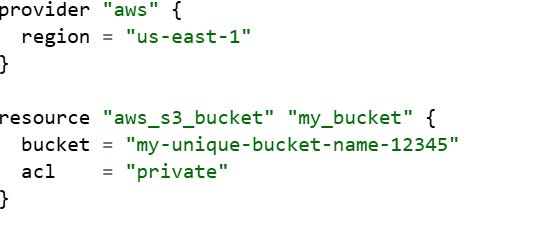

Provisioning tools like Terraform, CloudFormation[8], and Pulumi[9] are used to create the cloud infrastructure itself: servers, databases, networks, and more.

Unlike other tools that configure what runs on a server, provisioning tools build the servers and resources themselves.

For example, the config below sets the AWS region and creates a private S3 bucket:

We will take a closer look at Terraform, one of the most widely used IaC tools among data teams, later in this post.

Why Should Data Engineers Use IaC?

Adopting IaC takes some upfront effort, but for modern data engineering the payoff is substantial. Here’s why.

1. Speed and Reliability

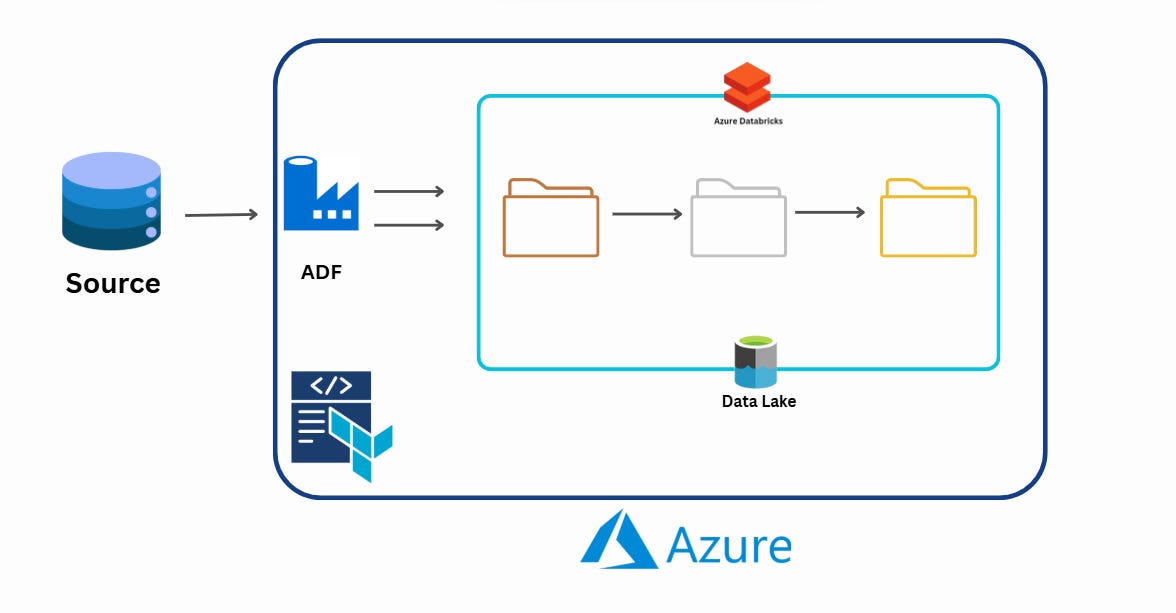

Provision entire data environments (data warehouses, lakehouse clusters, Kafka topics, S3 buckets, Databricks workspaces) in minutes, not days. IaC ensures every environment is configured the same way, reducing configuration drift and the breaking changes it causes in ETL/ELT workflows.

2. Self-Service Environments

No more waiting on DevOps to spin up a Redshift cluster or create an Azure Data Lake container. With IaC, we can deploy infrastructure with a single command or as part of an automated CI/CD pipeline.
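As a hedged sketch of the CI/CD idea, here is a small Python wrapper around the Terraform CLI that runs `init`, saves the plan to a file, and then applies exactly that saved plan. The global `-chdir` option and the `init`, `plan -out=...`, `apply <planfile>` and `-input=false` usage are standard Terraform CLI features; the `terraform_steps` and `run_pipeline` functions, the `infra` folder, the `tfplan` file name, and the `dry_run` switch are our own illustrative choices.

```python
import subprocess


def terraform_steps(workdir: str, plan_file: str = "tfplan") -> list[list[str]]:
    """Build the Terraform commands a CI job would typically run, in order."""
    return [
        ["terraform", f"-chdir={workdir}", "init", "-input=false"],
        ["terraform", f"-chdir={workdir}", "plan", "-input=false", f"-out={plan_file}"],
        # Applying a saved plan file skips the interactive approval prompt and
        # applies exactly the changes that were reviewed in the plan step.
        ["terraform", f"-chdir={workdir}", "apply", "-input=false", plan_file],
    ]


def run_pipeline(workdir: str, dry_run: bool = True) -> None:
    for cmd in terraform_steps(workdir):
        print("$", " ".join(cmd))
        if not dry_run:
            # check=True stops the pipeline as soon as a step fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_pipeline("infra")  # dry run: only prints the commands
```

Saving the plan and applying that exact file is a common way to make sure what gets deployed is what was reviewed.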

3. Living Documentation for Data Platforms

Our data stack configuration lives in versioned code, not someone’s head. Anyone can inspect the repo to see how storage, compute, and networking are set up across environments.

4. Version Control and Rollbacks

Track every change to infrastructure definitions in Git. If a Spark job starts failing after a cluster config change, we can see exactly what was modified, revert the commit, and re-apply the previous configuration.

5. Validation and Testing Before Production

We can run automated checks to validate schema changes, security settings, and resource policies before anything touches production data.
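A minimal sketch of such a pre-deployment check in Python: a few hypothetical policy rules (encryption on, no public access, an `owner` tag present) run against planned resource settings. The resource fields are our own simplified shape, not Terraform's actual plan format.

```python
def check_policies(resources: list[dict]) -> list[str]:
    """Return policy violations; an empty list means the plan may proceed."""
    violations = []
    for res in resources:
        name = res["name"]
        if not res.get("encrypted", False):
            violations.append(f"{name}: encryption must be enabled")
        if res.get("public_access", False):
            violations.append(f"{name}: public access is not allowed")
        if "owner" not in res.get("tags", {}):
            violations.append(f"{name}: missing required 'owner' tag")
    return violations


# Simplified, hypothetical description of resources about to be deployed.
planned = [
    {"name": "raw-bucket", "encrypted": True, "public_access": False,
     "tags": {"owner": "data-eng"}},
    {"name": "tmp-bucket", "encrypted": False, "public_access": True, "tags": {}},
]

problems = check_policies(planned)
for problem in problems:
    print("POLICY VIOLATION:", problem)
print("Deployment blocked" if problems else "Checks passed")
```

In a CI pipeline, a non-empty result would make the job exit with a non-zero status, so the deployment step never runs.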

6. Reusable Data Infrastructure Modules

Package Terraform modules or CloudFormation templates for common patterns, like a secure S3 bucket with lifecycle policies, or a preconfigured Databricks cluster, so new projects start with proven, compliant building blocks.

7. Faster Incident Recovery

If a data pipeline or environment fails, IaC lets us recreate the entire infrastructure stack exactly as it was, reducing MTTR (Mean Time to Recovery) and avoiding costly downtime.

8. Built-in Governance, Security, and Compliance

Apply encryption, IAM roles, and network rules as code, and support compliance requirements (GDPR, SOC 2, HIPAA) with automated policy checks before deployment.

9. Happier Data Teams

Less manual provisioning, fewer “it works on my machine” issues, and more time to focus on building high-value data products instead of firefighting infrastructure problems.

What is Terraform?

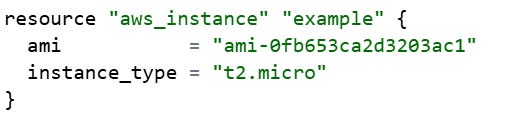

Terraform is an Infrastructure as Code (IaC) tool developed by HashiCorp[10] and used by thousands of teams worldwide to manage and automate infrastructure. Written in Go[11] and distributed as a single binary, it uses a simple declarative language to define infrastructure and can manage resources across a wide range of platforms: public clouds like AWS, Azure, and GCP, as well as private clouds and virtualisation platforms such as OpenStack[12] and VMware[13].

Terraform configurations are written in HashiCorp Configuration Language (HCL)[14] and stored in files with the .tf extension. These human-readable files define our infrastructure resources using a simple, declarative syntax. For example, we can create an AWS EC2 instance:

Note: Terraform was originally licensed under the Mozilla Public License (MPL), making it truly open source.

In August 2023, HashiCorp switched Terraform’s license to the Business Source License (BSL).

BSL is a “source-available” license, not an OSI-approved open source license.

We can still see and use the code for free in most cases, but we can’t use it to offer a competing paid service.

The last truly open source version is v1.5.x.

OpenTofu[15] is the community fork of Terraform that remains under an open source license.

Terraform comes in three editions: Community Edition, HCP Terraform (hosted SaaS), and Terraform Enterprise (self-hosted). For more information, refer to the official documentation here[16].

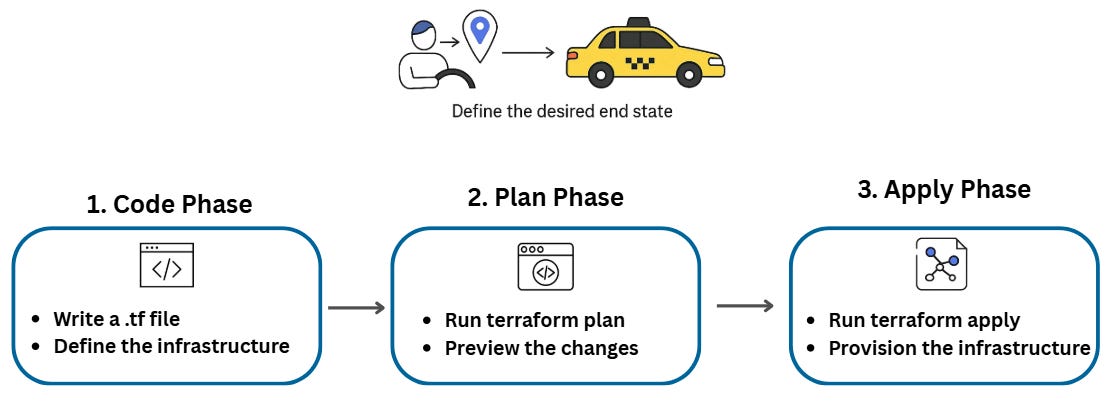

Terraform Workflow Overview

Think of infrastructure setup like getting from Point A to Point B.

Imperative approach: We give exact turn-by-turn instructions; we must spell out every step, and each one must be correct.

Declarative approach: We just say where we want to go, and the system figures out the best route.

Terraform uses the declarative approach. We describe our desired end state, and Terraform works out the steps to get there. This makes it powerful for managing infrastructure, especially for data platforms where speed, consistency, and automation matter.

Example Scenario:

We need to deploy:

Storage (object storage for raw data).

Ingestion (a service that pulls data in from source systems).

Database (structured data store).

Key Vault (secure storage for secrets, keys, and certificates).

Right now, nothing exists; this is our current state. Our desired state is to have all four resources up and running.

Terraform’s 3-Step Workflow

1. Code Phase: Define the infrastructure

Write a .tf file describing our resources:

Azure Data Lake.

Azure Data Factory.

Azure PostgreSQL database.

Key Vault.

This file is our blueprint.

2. Plan Phase: Preview the changes

Run terraform plan to compare our current state (empty) with our desired state (defined in code). Terraform shows exactly what it will create, change, or destroy before it touches anything.
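Conceptually, this comparison is a diff between desired and current state. A simplified Python sketch, assuming each resource is keyed by a name and described by a flat dict of hypothetical settings (Terraform's real state and plan formats are much richer than this):

```python
def plan(current: dict[str, dict], desired: dict[str, dict]) -> dict[str, list[str]]:
    """Compare current and desired state and list what would change."""
    to_create = sorted(desired.keys() - current.keys())
    to_destroy = sorted(current.keys() - desired.keys())
    # Resources that exist in both but whose settings differ need an update.
    to_update = sorted(
        name for name in desired.keys() & current.keys()
        if desired[name] != current[name]
    )
    return {"create": to_create, "update": to_update, "destroy": to_destroy}


# Desired state from our code (hypothetical settings).
desired = {
    "data_lake": {"hierarchical_namespace": True},
    "data_factory": {"location": "westeurope"},
    "postgres": {"storage_gb": 32},
    "key_vault": {"sku": "standard"},
}

print(plan({}, desired))
# {'create': ['data_factory', 'data_lake', 'key_vault', 'postgres'], 'update': [], 'destroy': []}

# Someone changed the Key Vault SKU by hand: the plan shows an update (drift).
current = {**desired, "key_vault": {"sku": "premium"}}
print(plan(current, desired))
# {'create': [], 'update': ['key_vault'], 'destroy': []}
```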

3. Apply Phase: Build it

Run terraform apply to provision the resources. Terraform calls the cloud provider’s APIs and creates everything. It can also print output values we define, such as connection strings or resource URLs.
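Behind the scenes, Terraform also works out the order in which to create resources from the references between them (for example, a storage container can only be created after its storage account) and creates independent resources in parallel. A simplified sketch of that ordering using Python's standard `graphlib` module; the resource names and dependencies are hypothetical, and grouping into "waves" is a simplification of how Terraform actually walks its graph:

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it depends on (hypothetical names).
dependencies = {
    "resource_group": set(),
    "storage_account": {"resource_group"},
    "container_bronze": {"storage_account"},
    "container_silver": {"storage_account"},
    "key_vault": {"resource_group"},
    "data_factory": {"resource_group"},
}


def creation_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group resources into waves; everything in one wave could be created in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # also raises CycleError if dependencies form a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for i, wave in enumerate(creation_waves(dependencies), start=1):
    print(f"wave {i}: {wave}")
# wave 1: ['resource_group']
# wave 2: ['data_factory', 'key_vault', 'storage_account']
# wave 3: ['container_bronze', 'container_silver']
```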

Result: We go from zero to a fully working environment with repeatable, version-controlled, and automated deployments, perfect for scaling and maintaining data infrastructure.

We’ll go through hands-on examples later in this post.

Terraform Modules and Providers

Terraform is modular and pluggable by design, which gives it flexibility and scalability.

Modules allow us to group related resources together. Each module can accept input variables and return output values, making it reusable and easy to plug into larger systems.
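One way to picture a module is as a function: input variables go in, a group of related resources is defined, and output values come out for other parts of the configuration to use. A hypothetical Python analogy (the `storage_module` function and its naming convention are illustrative, not Terraform syntax):

```python
def storage_module(project: str, env: str, layers: list[str]) -> dict:
    """Hypothetical 'module': a storage account plus one container per layer.

    Inputs are plain arguments; outputs are returned so other parts of the
    configuration (for example, a Data Factory definition) can reference them.
    """
    # Hypothetical naming convention: "st" + project + environment, lowercased.
    account = f"st{project}{env}".lower()
    containers = [f"{account}/{layer}" for layer in layers]
    return {"account_name": account, "container_ids": containers}


lake = storage_module("sales", "dev", ["bronze", "silver", "gold"])
print(lake["account_name"])      # stsalesdev
print(lake["container_ids"][0])  # stsalesdev/bronze
```

Calling the same function with different inputs (another project, another environment) reuses the pattern without copying its internals, which is the main benefit modules give us.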

Modules also declare the providers they need, and providers are what connect Terraform to our infrastructure.

Providers allow Terraform to interact with:

Infrastructure as a Service (IaaS) platforms like AWS, Azure, and GCP

Platform as a Service (PaaS) tools like Cloud Foundry

Even SaaS solutions like Cloudflare

So, while Terraform started with infrastructure automation, its reach now spans platforms and SaaS as well.

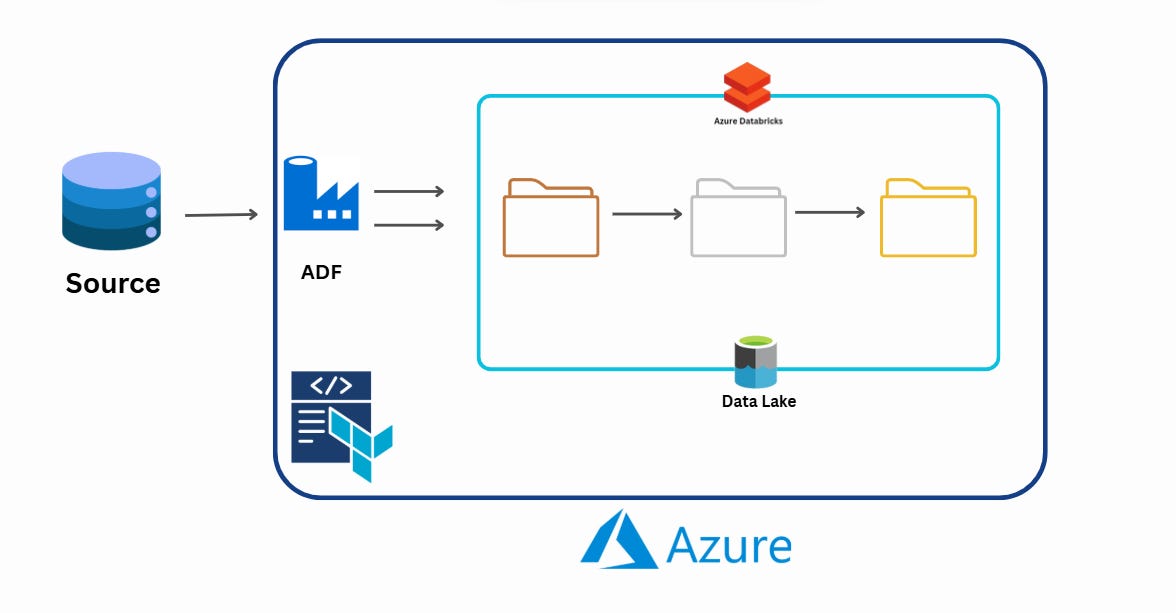

DevOps-First Approach

One of the biggest advantages of using Terraform is that it promotes a DevOps-first mindset.

Let’s build on our earlier example.

Assume we already have Azure Data Lake Storage (ADLS), Azure Data Factory (ADF), a database, and a Key Vault set up. Now, we want to add Azure Databricks.

We update our .tf file to define the new Azure Databricks resource. When we run terraform plan, Terraform detects that:

Those existing services are already in place.

Only Azure Databricks needs to be created.

After reviewing the plan, we apply the changes, and Terraform updates our environment accordingly. This process helps prevent configuration drift, where the actual infrastructure state diverges from the code.

Because everything is defined and version-controlled as code, it becomes easy to:

Track changes

Reproduce environments reliably

Ensure consistency across Development, Test, and Production stages

By simply swapping out environment-specific variables, Terraform can recreate the exact same infrastructure in different stages.
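The idea is that the resource definitions stay the same and only a small set of per-environment values changes. In Terraform itself this is typically done with input variables and a separate .tfvars file per environment, passed with the `-var-file` option. A minimal Python sketch of the same idea, with hypothetical variable names and values:

```python
# Shared settings used by every environment (hypothetical values).
BASE = {"location": "westeurope", "node_count": 1, "sku": "standard"}

# Per-environment overrides, similar in spirit to one variables file per stage.
ENV_OVERRIDES = {
    "dev": {},
    "test": {"node_count": 2},
    "prod": {"node_count": 4, "sku": "premium"},
}


def render_environment(env: str) -> dict:
    """Same resource definitions for every stage; only the variables differ."""
    settings = {**BASE, **ENV_OVERRIDES[env]}
    return {
        "resource_group": f"rg-dataplatform-{env}",
        "databricks_workspace": f"dbw-dataplatform-{env}",
        **settings,
    }


for env in ENV_OVERRIDES:
    print(env, render_environment(env))
```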

Terraform in Action

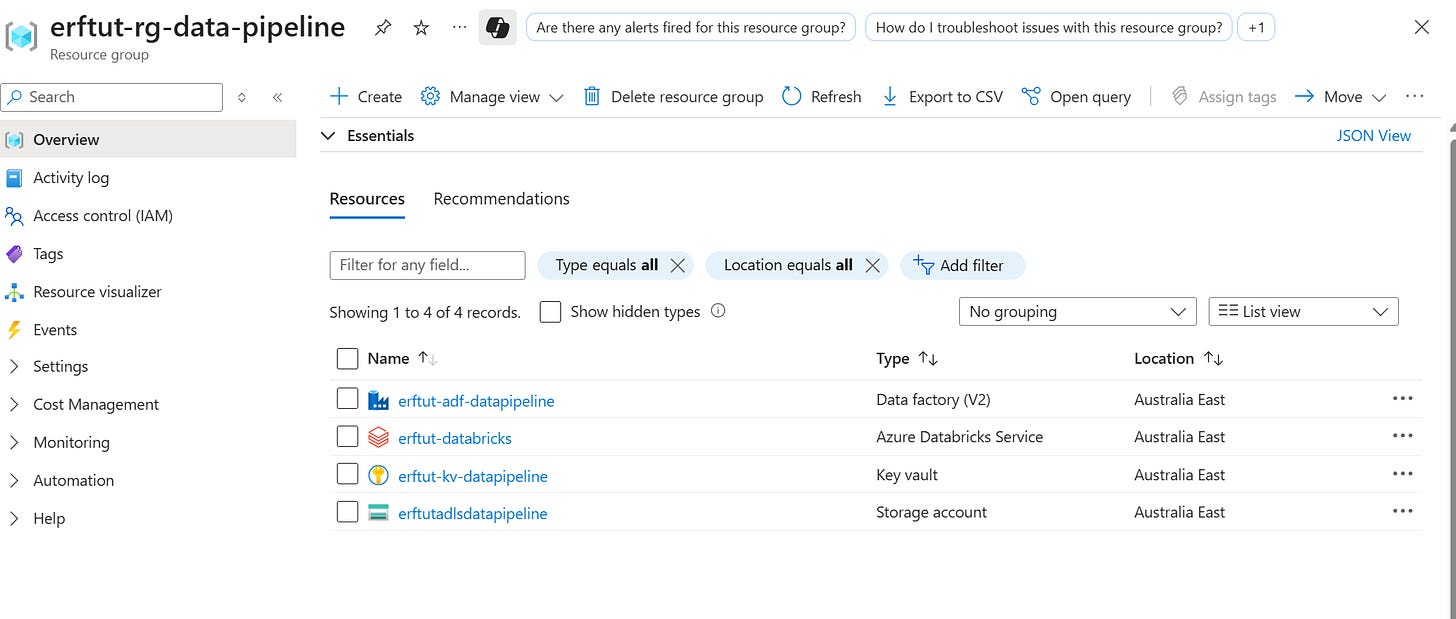

This is a simple hands-on example that creates:

Azure Resource Group

Azure Key Vault

Azure Data Lake Storage Gen2

Storage containers (Bronze, Silver, Gold)

Azure Data Factory

Azure Databricks

Note: You should have a basic understanding of Azure and an active Azure account. If you don’t, you can start by checking out this Azure training[17].

The implementation repository is available here[18].

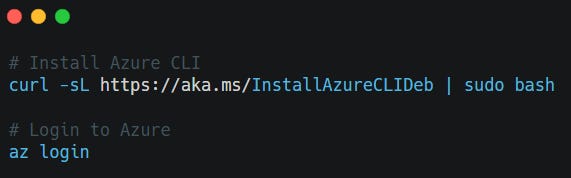

Step 1: Set up Environment

Terraform can be installed on Linux, macOS, or Windows. For more details, check the official installation guide[19].

The instructions are straightforward and walk us through installing Terraform using our terminal.

Since we’re using Azure as our provider, we’ll also install the Azure CLI (in this example, on Ubuntu) and sign in with az login, so Terraform can authenticate against our Azure account.

Step 2: Clone and Navigate

Let’s clone the repo and navigate to the Terraform folder.

Step 3: Define the infrastructure

We created two .tf files:

main.tf: Lists exactly which Azure resources we want to create.

providers.tf: Tells Terraform:

Which tools (providers) to use:

azurerm: Create and manage resources in Microsoft Azure.

random: Generate random values, for example unique suffixes for resource names.

Where to get them: hashicorp/azurerm and hashicorp/random.

Which version to use: ~> 3.0 means “use version 3.x, but not 4.x”.
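The `~>` operator allows only the right-most version component written in the constraint to increase. A small Python sketch of that rule for constraints with at least two components, such as `~> 3.0` or `~> 3.0.1`; it is an illustration only, not Terraform's own implementation, and it ignores pre-release versions:

```python
def parse(version: str) -> tuple[int, ...]:
    """Turn '3.117.0' into (3, 117, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def satisfies_pessimistic(version: str, constraint: str) -> bool:
    """Check `version` against a '~> X.Y' or '~> X.Y.Z' style constraint."""
    base = parse(constraint.removeprefix("~>").strip())
    if len(base) < 2:
        raise ValueError("this sketch expects at least two version components")
    # Upper bound: drop the right-most component and bump the one before it,
    # so '~> 3.0' means < 4.0 and '~> 3.0.1' means < 3.1.
    upper = base[:-2] + (base[-2] + 1,)
    v = parse(version)
    return v >= base and v[: len(upper)] < upper


for candidate in ["3.0.0", "3.117.0", "4.0.0"]:
    print(candidate, satisfies_pessimistic(candidate, "~> 3.0"))
# 3.0.0 True
# 3.117.0 True
# 4.0.0 False
```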

Don’t worry if you’re not familiar with the syntax; the Terraform documentation[20] walks through it with examples.

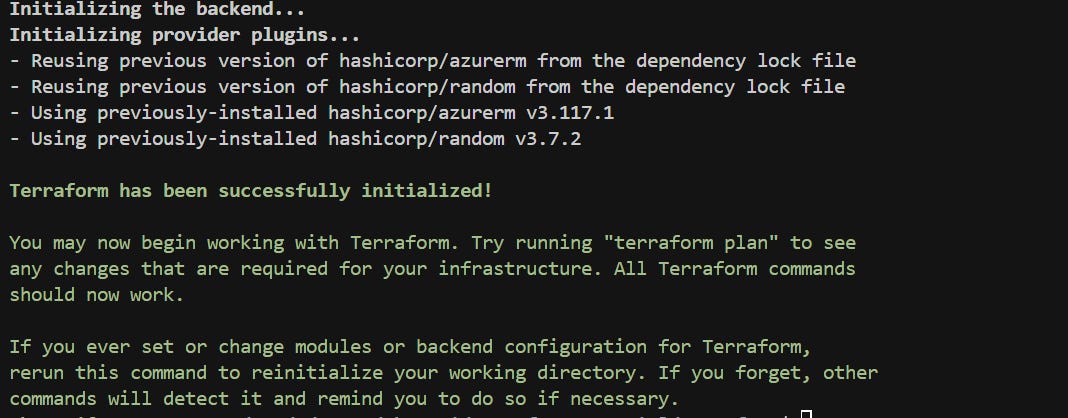

Step 4: Plan Deployment

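First, let’s run

terraform init

once in the project folder. It initialises the working directory and downloads the providers declared in providers.tf; terraform plan won’t run until this is done.

Then, let’s run

terraform plan

It:

Looks at what we already have.

Compares it with what’s in our Terraform files.

Shows exactly what changes it will make (add, change, or delete), but doesn’t actually make them yet.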

Step 5: Deploy Infrastructure

Now let’s run:

terraform apply

It shows the plan again, asks us to confirm by typing yes, and then creates the resources on Azure.

Step 6: Clean Up (When Done)

Once we're done, we can delete all the resources we created by running:

terraform destroy

Amazing! We just learned how to create our resources on Azure using Terraform.

Conclusion

Infrastructure as Code transforms the way data engineers manage cloud resources by applying software engineering principles to infrastructure. With tools like Terraform, teams gain speed, reliability, and repeatability while reducing manual errors and simplifying collaboration. By adopting IaC, we enable scalable, version-controlled, and automated infrastructure deployments, freeing our team to focus on building better data solutions rather than firefighting. In fast-paced environments, mastering IaC isn’t just a nice-to-have; it’s essential for sustainable growth and operational excellence.

If you’re looking to break into data engineering or preparing for data engineering interviews, you might find this Data Engineering Interview Guide series[21] helpful.

Want to take your SQL skills to the next level? Check out our SQL Optimisation series[22].

We value your feedback

If you have any feedback, suggestions, or additional topics you’d like us to cover, please share them with us. We’d love to hear from you!

[1] https://www.amazon.com.au/Terraform-Running-Writing-Infrastructure-Code/dp/1098116747

[2] https://www.linkedin.com/in/jbrikman/

[3] https://www.freecodecamp.org/news/bash-scripting-tutorial-linux-shell-script-and-command-line-for-beginners/

[4] https://www.docker.com/

[5] https://kubernetes.io/

[6] https://docs.docker.com/reference/cli/docker/swarm/

[7] https://docs.aws.amazon.com/AmazonECS/latest/developerguide/Welcome.html

[8] https://aws.amazon.com/cloudformation/

[9] https://www.pulumi.com/

[10] https://www.hashicorp.com/en

[11] https://go.dev/

[12] https://www.openstack.org/

[13] https://www.vmware.com/

[14] https://developer.hashicorp.com/terraform/language/syntax/configuration

[15] https://opentofu.org/

[16] https://developer.hashicorp.com/terraform/intro/terraform-editions

[17] https://learn.microsoft.com/en-us/training/courses/az-900t00

[18] https://github.com/pipelinetoinsights/terrafom_simple_tutorial

[19] https://developer.hashicorp.com/terraform/tutorials/aws-get-started/install-cli

[20] https://developer.hashicorp.com/terraform/tutorials/azure-get-started

[21] https://pipeline2insights.substack.com/t/interview-preperation

[22] https://pipeline2insights.substack.com/t/sql-optimisation
