Introduction to data load tool (dlt): A Python Library for Simple Data Ingestion

Discover the basics of dlt and its role in modern data engineering workflows

Are you struggling with the complexity of ingesting data from multiple sources into various destinations? Or are you tired of the clunky data import features built into different systems?

Say hello to dlt, a Python library that simplifies data ingestion and integration. It’s designed to save you time and effort so you can focus on what really matters.

In this series of posts, we’ll introduce you to dlt, guide you through getting started, and, if you find it helpful, inspire you to add it to your skill set. Give it a try on your projects, whether for your team or personal use, and share your experiences with us if you enjoy it! 🙂

Before we dive into dlt, let’s start with the basics: data engineering, data ingestion, and everything that comes with it. By understanding these core concepts, you'll see how dlt fits seamlessly into the bigger picture and makes your data pipeline smoother. Let’s take it step by step!

Data Engineering and Data Ingestion

As a Data Engineer, your primary role is ingesting raw data, transforming it into meaningful insights, and making it ready for downstream use cases like analysis, reporting, or machine learning.

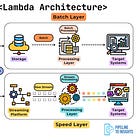

When it comes to data ingestion, there are two main approaches: Batch and Streaming. These methods can also complement each other. For example, machine learning models are typically trained on batch data, but the same data might first be ingested via streaming to enable real-time use cases like anomaly detection.

ETL vs ELT

When thinking about general data pipelines, ETL and ELT are two common patterns for integrating and processing data.

ETL (Extract, Transform, Load)

Process: Extract data → Transform it → Load it into the target system.

Key Feature: Data is cleaned and prepared before being loaded.

Use Case: Often used in traditional systems like data warehouses, where structured and well-prepared data is essential.

Challenge: Slow extraction times due to source system limitations.

ELT (Extract, Load, Transform)

Process: Extract data → Load it into the system → Transform it later.

Key Feature: Raw data is loaded first, with transformations performed in the target system.

Use Case: Widely adopted in modern architectures like data lakes and high-performance warehouses.

Challenge: If raw data is left untransformed, it can lead to a "data swamp", an unusable mess of unorganised data.

Key Difference Between ETL and ELT

The main distinction is when the data transformation happens.

ETL transforms data upfront, ensuring only clean and structured data enters the system.

ELT defers transformation, allowing flexibility in the target system but requiring careful governance to avoid creating a data swamp.

In practice, modern tools often blend these approaches, depending on specific project needs, making it easier to adapt to different workflows and business requirements.
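To make the difference in ordering concrete, here is a minimal sketch that uses Python's built-in sqlite3 module as a stand-in for the target system. The sample records and the `clean`, `run_etl`, and `run_elt` helpers are hypothetical and exist only for illustration.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
RAW_ORDERS = [
    {"id": 1, "amount": " 19.90 ", "country": "de"},
    {"id": 2, "amount": "5.00", "country": "US"},
]


def clean(order):
    """Transform step: trim and cast the amount, upper-case the country."""
    return (order["id"], float(order["amount"].strip()), order["country"].upper())


def run_etl(conn):
    """ETL: transform in Python first, then load only the cleaned rows."""
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL, country TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)", [clean(o) for o in RAW_ORDERS]
    )
    return conn.execute("SELECT id, amount, country FROM orders ORDER BY id").fetchall()


def run_elt(conn):
    """ELT: load the raw rows untouched, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE raw_orders (id INTEGER, amount TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:id, :amount, :country)", RAW_ORDERS
    )
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT id, CAST(TRIM(amount) AS REAL) AS amount, UPPER(country) AS country
        FROM raw_orders
        """
    )
    return conn.execute("SELECT id, amount, country FROM orders ORDER BY id").fetchall()


etl_rows = run_etl(sqlite3.connect(":memory:"))
elt_rows = run_elt(sqlite3.connect(":memory:"))
print(etl_rows == elt_rows)  # True
```

Both paths end with the same clean table. The ELT path also keeps `raw_orders` in the target, which is where the flexibility comes from, and also the governance burden.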

What is dlt?

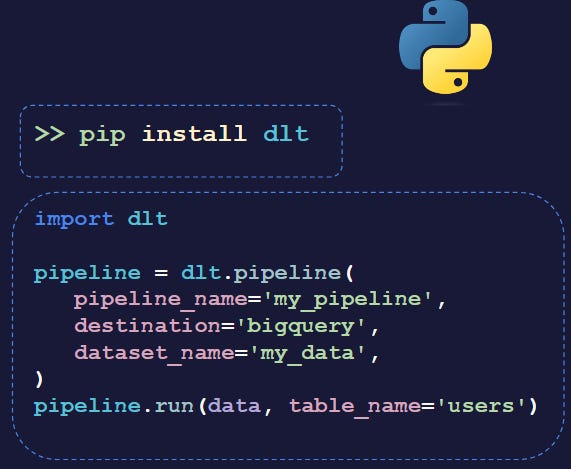

dlt is an open-source Python library designed to make ELT processes simpler and more efficient. It handles tasks like schema creation, data normalisation, and incremental loading. Just as dbt empowers SQL users to handle the Transform (T) layer of ELT, dlt empowers Python users to manage the Extract (E) and Load (L) layers with ease.

With dlt, you can load data from a variety of sources into different destinations. Whether you’re working with REST APIs, SQL databases, cloud storage, or Python data structures, dlt provides a straightforward and flexible interface for integration.

History of dlt

dlt: Adrian, the co-founder of dlt, came up with the idea in 2017. After a decade as a freelance data engineer, he realised that many of his projects were similar, yet he had to recreate solutions from scratch each time. This led him to wonder, “What if there was a way to reuse code?” That question sparked the idea for dlt.

dltHub (the company behind dlt and its ecosystem), based in Berlin and New York City, was founded by machine learning and data veterans. It is backed by Foundation Capital, Dig Ventures, and technical pioneers from renowned companies like Hugging Face, Rasa, Instana, Miro, and Matillion, and aims to innovate and empower the data community.

dlt+ provides workshops, migration services, and support for data teams who would love assistance with their data platform.

Why dlt?

1. It’s a Pythonic and user-friendly library

Easy installation and set-up (it is a pip-installable, minimalistic library that is free and Apache 2.0 licensed).

Easy to use with a shallow learning curve and a declarative interface.

It’s Pythonic, so you don’t have to learn new frameworks or programming languages.

It's just a library that runs anywhere Python runs.

It integrates with Colab, AWS Lambda, Airflow, and local environments.

It has a powerful CLI for managing, deploying, and inspecting local pipelines.

It adapts to growing data needs in production.

2. It supports data engineering (DE) best practices

dlt builds common DE best practices into the library, so anyone who has worked with Python can build a pipeline to a senior engineer's standard:

a) Schema Evolution:

With dlt, schema evolution is handled automatically. When modifications occur in the source data’s schema, dlt detects these changes and updates the schema accordingly.
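The idea behind schema evolution can be sketched in a few lines of plain Python. This is a simplified illustration and not dlt's internal implementation: the `evolve_schema` helper and the name-to-type mapping are assumptions made for this example.

```python
def evolve_schema(schema, record):
    """Add columns from `record` that `schema` does not know yet.

    `schema` maps column name -> Python type name (a simplification).
    Returns only the columns that were newly added.
    """
    new_columns = {}
    for column, value in record.items():
        if column not in schema:
            schema[column] = type(value).__name__
            new_columns[column] = schema[column]
    return new_columns


schema = {}
records = [
    {"id": 1, "name": "Ada"},
    {"id": 2, "name": "Grace", "email": "grace@example.com"},  # source added a column
]
changes = [evolve_schema(schema, record) for record in records]
print(changes[1])  # {'email': 'str'}
```

The second record introduces an `email` column, which is detected and added without any manual migration step.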

b) Incremental Loading:

Incremental loading adds only new or updated data, saving time and cost by skipping already loaded records.
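As a rough sketch of the mechanism, incremental loading usually tracks a cursor value (for example a timestamp) between runs and skips anything at or below it. The `load_incrementally` helper and the `updated_at` field below are hypothetical. dlt provides its own incremental helper (`dlt.sources.incremental`), which this plain-Python version only approximates.

```python
def load_incrementally(source_rows, destination, state, cursor_field="updated_at"):
    """Append only rows whose cursor value is newer than the last one seen."""
    last_value = state.get("last_value")
    new_rows = [
        row for row in source_rows
        if last_value is None or row[cursor_field] > last_value
    ]
    destination.extend(new_rows)
    if new_rows:
        # Remember the highest cursor value for the next run.
        state["last_value"] = max(row[cursor_field] for row in new_rows)
    return len(new_rows)


source = [
    {"id": 1, "updated_at": "2024-01-01"},
    {"id": 2, "updated_at": "2024-01-02"},
]
destination, state = [], {}

first_run = load_incrementally(source, destination, state)
source.append({"id": 3, "updated_at": "2024-01-03"})  # new data arrives
second_run = load_incrementally(source, destination, state)
print(first_run, second_run)  # 2 1
```

The second run loads only the one new row, because the stored cursor already covers the first two.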

dlt supports the following write options (called write dispositions in dlt):

Full Load: Replace all data

Append: Add new data

Merge: Combine or update data with a key
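The three options above differ only in what happens to rows that are already in the destination. A minimal sketch of those semantics, using plain lists of dicts and a hypothetical `write` helper (not a dlt function):

```python
def write(table, rows, disposition, primary_key="id"):
    """Return the new table contents after applying one write disposition."""
    if disposition == "replace":  # full load: discard what was there
        return list(rows)
    if disposition == "append":   # add new rows, keep existing ones untouched
        return table + list(rows)
    if disposition == "merge":    # upsert: a row with a known key overwrites the old row
        by_key = {row[primary_key]: row for row in table}
        by_key.update({row[primary_key]: row for row in rows})
        return list(by_key.values())
    raise ValueError(f"unknown write disposition: {disposition}")


existing = [{"id": 1, "status": "new"}, {"id": 2, "status": "new"}]
incoming = [{"id": 2, "status": "paid"}, {"id": 3, "status": "new"}]

replaced = write(existing, incoming, "replace")
appended = write(existing, incoming, "append")
merged = write(existing, incoming, "merge")
print(len(replaced), len(appended), len(merged))  # 2 4 3
```

Note that append keeps both versions of row 2, while merge keeps only the updated one.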

c) Data Contracts:

Data contracts in dlt ensure schema consistency by defining rules for how tables, columns, and data types are handled during ingestion. You can control schema evolution, prevent unexpected changes, and validate data using modes such as evolve, freeze, discard_row, and discard_value. These contracts maintain data integrity while allowing flexibility where needed.
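To illustrate how the contract modes differ, the sketch below applies each one to a record that carries an unexpected column. dlt's documentation splits the discard mode into `discard_row` and `discard_value`, which is what this example follows. The `apply_column_contract` helper and `ContractViolation` exception are hypothetical and only mimic the behaviour; in dlt the modes are set through configuration rather than called like this.

```python
class ContractViolation(Exception):
    """Raised in 'freeze' mode when a record does not match the schema."""


def apply_column_contract(record, known_columns, mode):
    """Apply a contract mode to columns of `record` not in `known_columns` (a set).

    Returns the record to load, or None when the whole row is dropped.
    """
    unknown = [column for column in record if column not in known_columns]
    if not unknown or mode == "evolve":
        known_columns.update(unknown)  # evolve: accept and remember new columns
        return record
    if mode == "freeze":
        raise ContractViolation(f"unexpected columns: {unknown}")
    if mode == "discard_value":        # keep the row, drop the unknown values
        return {c: v for c, v in record.items() if c in known_columns}
    if mode == "discard_row":          # drop the entire row
        return None
    raise ValueError(f"unknown contract mode: {mode}")


record = {"id": 1, "name": "Ada", "nickname": "ada"}

kept = apply_column_contract(record, {"id", "name"}, "discard_value")
dropped = apply_column_contract(record, {"id", "name"}, "discard_row")
columns = {"id", "name"}
evolved = apply_column_contract(record, columns, "evolve")
print(kept)  # {'id': 1, 'name': 'Ada'}
```

With "freeze", the same record would raise `ContractViolation` instead of being loaded, which is the strictest option.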

d) Performance Management:

dlt provides several mechanisms and configuration options to manage performance and scale up pipelines:

Parallel execution: the extract, normalize, and load stages can each run in parallel.

Thread pools and async execution: sources and resources can run in parallel.

Tunable memory buffers, intermediary file sizes, and compression options.
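As a generic illustration of why thread pools help with extraction, the sketch below fetches several pages concurrently using only the standard library. It does not use dlt's own worker settings, and `fetch_page` is a hypothetical stand-in for an I/O-bound call such as an HTTP request.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_page(page):
    """Stand-in for an I/O-bound call such as an HTTP request (hypothetical)."""
    time.sleep(0.05)  # simulate network latency
    return [{"page": page, "item": i} for i in range(3)]


def extract_parallel(pages, max_workers=4):
    """Fetch all pages concurrently; results come back in the order of `pages`."""
    rows = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for page_rows in pool.map(fetch_page, pages):
            rows.extend(page_rows)
    return rows


rows = extract_parallel(range(1, 5))
print(len(rows))  # 12
```

Because the work is dominated by waiting on I/O, the four pages are fetched in roughly the time of one sequential request.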

How does dlt work?

A basic dlt pipeline has three steps: extract, normalize, and load.
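A minimal sketch of such a pipeline is shown below, assuming dlt is installed with DuckDB support (for example via `pip install "dlt[duckdb]"`). A single `pipeline.run()` call performs the extract, normalize, and load stages in sequence. The pipeline name, dataset name, and sample data are hypothetical.

```python
def users():
    """Extract step: any iterable of dicts can act as a data source."""
    yield {"id": 1, "name": "Ada", "address": {"city": "London"}}
    yield {"id": 2, "name": "Grace", "address": {"city": "New York"}}


def run_pipeline():
    """Run extract, normalize, and load in one call; returns dlt's load info."""
    # Imported here so this module can be imported before dlt is installed
    # (assumption: dlt with the duckdb extra is available at call time).
    import dlt

    pipeline = dlt.pipeline(
        pipeline_name="intro_pipeline",  # hypothetical name
        destination="duckdb",
        dataset_name="intro_data",       # hypothetical dataset name
    )
    # Normalization infers the schema from the yielded dicts before loading.
    return pipeline.run(users(), table_name="users", write_disposition="replace")
```

Calling `print(run_pipeline())` should print a summary of the load. The nested `address` dict is there to show that the source does not need to be flat; dlt's normalize step is what turns it into relational columns.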

dlt Case Studies

Several companies have already started using dlt. For case studies, please visit https://dlthub.com/case-studies

How to deploy dlt pipelines

dlt can be deployed on serverless platforms such as AWS Lambda, Azure Functions, or Google Cloud Functions. It can also be containerised with Docker and run on platforms like Kubernetes or AWS ECS for scalable execution. Additionally, pipelines can be scheduled with orchestrators like Airflow or Dagster, with deployment automated via CI/CD tools like GitHub Actions. In the coming weeks, we’ll explore these options in detail.

Let’s see dlt in action

In this example, we will load data from a public MySQL database into DuckDB, an in-process SQL OLAP database management system.

Prerequisites:

Python 3.9 or higher

Virtual environment set up

dlt installed

Steps:

Create a dlt Project

Initialise a new project with `dlt init sql_database duckdb`. This creates the necessary files and folders for your SQL database to DuckDB pipeline.

Files created:

.dlt/ (contains configuration files)

.dlt/config.toml: This file contains the configuration settings for your dlt project.

.dlt/secrets.toml: This file stores your credentials, API keys, tokens, and other sensitive information.

sql_database_pipeline.py (main script for the pipeline)

requirements.txt (lists Python dependencies)

Configure the Pipeline Script

Write the pipeline script to load tables such as family and genome.
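A hedged sketch of what such a script might look like is given below. It assumes a dlt version that ships the SQL database source as `dlt.sources.sql_database`, and that the database credentials are already present in .dlt/secrets.toml. The pipeline and dataset names are assumptions, not values from this post.

```python
# Tables to load from the source database (named in the text above).
TABLES = ("family", "genome")


def load_tables_family_and_genome():
    """Load the selected tables from the SQL database into DuckDB."""
    # Imported here so the module can be imported before dlt is installed
    # (assumption: dlt with the sql_database source and duckdb is available).
    import dlt
    from dlt.sources.sql_database import sql_database

    # Credentials are resolved by dlt from .dlt/secrets.toml.
    source = sql_database().with_resources(*TABLES)

    pipeline = dlt.pipeline(
        pipeline_name="sql_to_duckdb_pipeline",      # assumed name
        destination="duckdb",
        dataset_name="sql_to_duckdb_pipeline_data",  # assumed name
    )
    load_info = pipeline.run(source)
    print(load_info)
    return load_info
```

In a real script you would call `load_tables_family_and_genome()` at the bottom of the file, typically under an `if __name__ == "__main__":` guard, and keep the imports at the top.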

Add Credentials

Provide your SQL database credentials in .dlt/secrets.toml.

Install Dependencies

a) Install required dependencies: `pip install -r requirements.txt`

b) Install database-specific dependencies, such as pymysql: `pip install pymysql`

Run the Pipeline

Execute the pipeline with `python sql_database_pipeline.py`. This will load the data into a DuckDB file.

Explore the Data

Install Streamlit (`pip install streamlit`) and run the browser app with `dlt pipeline <pipeline_name> show` to explore the loaded data.

Resources to learn

Source code: the open-source dlt library

dltHub Documentation: Comprehensive guides and references for using the dlt library.

Slack: A highly responsive community for support and discussions.

Substack: dltHub's newsletter for staying updated on library features and announcements.

LinkedIn: News, updates, meetup announcements, and more.

YouTube: Learning resources, workshop recordings, and meetup videos.

Summary

Whether you’re dealing with the challenges of building and maintaining data pipelines, integrating Python tools into enterprise systems, or scaling workflows efficiently, dlt simplifies the process. It reduces reliance on specialized roles and bridges the gap between modern data needs and Python-friendly solutions. Try dlt today and transform the way you tackle data challenges—simpler, smarter, and more collaborative!

If you found this post valuable and want to stay updated on the latest insights in data engineering, consider subscribing to our newsletter.

In the upcoming posts, we’ll dive deeper into dlt with practical insights, exploring concepts such as sources, resources, integrations between various sources and destinations, incremental loading, and more. If you’re eager to get started before then, the dlt documentation is an excellent resource, followed by the dlt YouTube channel for further learning.

If you’re looking to break into data engineering or preparing for data engineering interviews, you might find this Data Engineering Interview Guide series helpful.

Want to take your SQL skills to the next level? Check out our SQL Optimisation series.
