Week 22/34: Batch Processing for Data Engineering Interviews

Understanding Batch, Micro-Batch, and Near Real-Time Processing in Data Engineering

In Data Engineering, there are two main ways to handle data: Batch Processing and Stream Processing. Both are useful, but they are used for different types of problems.

Between them, micro-batching and near real-time processing offer middle-ground solutions, balancing speed, cost, and complexity based on business needs.

Most companies still use batch processing for their data. By one estimate, over 80% of data work is still done this way [1]. Batch is popular because it’s simple, stable, and easy to manage. If a business needs faster updates, it can run its batch jobs more often, such as once an hour instead of once a day.

However, as more businesses demand faster, real-time insights, it's important for data engineers to understand how stream processing works. Even if you don’t use it right away, knowing it helps you build better systems in the future.

If you're new to batch and streaming data, we recommend exploring “Data Ingestion: Batch vs. Stream, Which Strategy Is Right for You?” [2] from our Data Engineering Lifecycle [3] series.

If you want to see what's covered in our interview series, please take a look at the Data Engineering Preparation Guide series [4] for all the posts.

In this post, we’ll cover:

What is Batch Processing?

What is Micro-Batching?

What is Near Real-Time Processing?

Which method to choose?

Common Interview Questions.

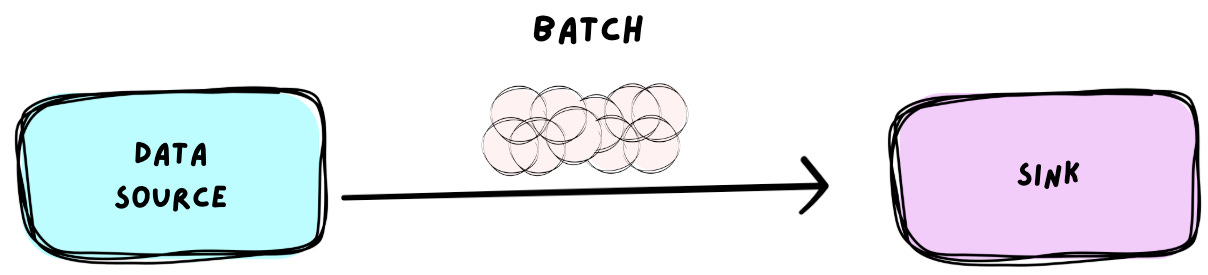

What is Batch Processing?

Batch processing involves collecting data over time and processing it all at once. Rather than reacting to each event as it happens, systems wait until a full "batch" of data is ready and then process it.

Think of it like doing laundry: wait until you have a full load, then wash it all together.

Key Features:

Runs on a fixed schedule (e.g., hourly or daily).

Works well with large datasets.

Easier to debug and manage.

Great for reporting, dashboards, and quality checks.

Common Use Cases

Financial reconciliation: End-of-day balance calculations and report generation.

ETL/ELT pipelines: Loading data warehouses and data marts.

Complex analytics: Operations requiring full dataset scans or historical analysis.

ML model training: Processing complete datasets to train machine learning models.

Log analytics: Aggregating and analysing application logs across systems.

Example:

A bank collects and processes all daily transactions overnight to update customer balances.
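The bank example can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the account IDs, amounts, and the `apply_daily_batch` function are all hypothetical, and a real system would read the day's transactions from a database or files rather than an in-memory list.

```python
from collections import defaultdict
from decimal import Decimal


def apply_daily_batch(balances, transactions):
    """Apply one full day of transactions to the opening balances in one pass."""
    # First accumulate the net movement per account for the whole batch.
    net_change = defaultdict(Decimal)
    for account_id, amount in transactions:
        net_change[account_id] += amount

    # Then update each affected balance once, leaving the input untouched.
    closing = dict(balances)
    for account_id, delta in net_change.items():
        closing[account_id] = closing.get(account_id, Decimal("0")) + delta
    return closing


# Hypothetical data: opening balances and the transactions collected during the day.
opening = {"acc-1": Decimal("100.00"), "acc-2": Decimal("50.00")}
day_transactions = [
    ("acc-1", Decimal("-30.00")),
    ("acc-2", Decimal("20.00")),
    ("acc-1", Decimal("5.50")),
]

closing = apply_daily_batch(opening, day_transactions)
print(closing)
```

Nothing is updated while transactions arrive during the day. The balances change only when the overnight job runs over the complete batch.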

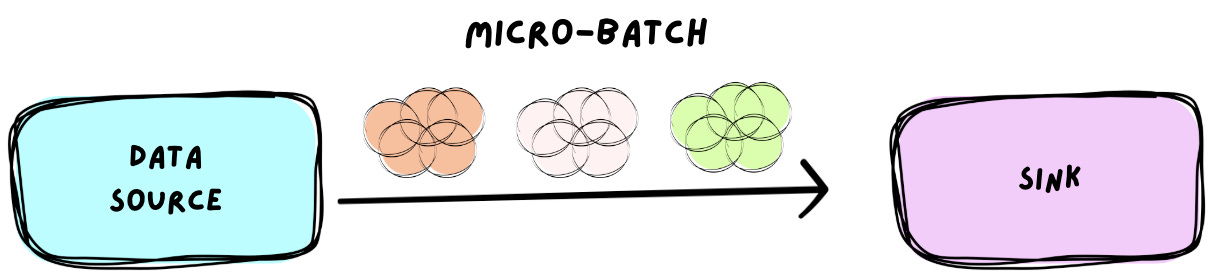

What is Micro-Batching?

Micro-batching bridges the gap between traditional batch and real-time stream processing. Instead of waiting for a full day of data, it processes small chunks at shorter intervals, like every 1 to 5 minutes.

It's like running a mini laundry load every few minutes instead of waiting for the full basket to fill up.

Key Features:

Smaller, time-based chunks (seconds to minutes).

Lower latency than batch, but simpler than full streaming.

Still maintains batch-like control and performance.

Easier to integrate with existing batch systems.

Common Use Cases:

User behaviour analysis: User signups or clickstream processing every few minutes.

Incremental data ingestion: Incremental updates to dashboards.

IoT data processing: Handling sensor data that tolerates some delay.

Example:

A job runs every 10 minutes to process new user signups and update internal metrics.
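One common way to build such a job is to remember a watermark (the timestamp of the newest record already processed) and pick up only newer records on each run. The sketch below assumes that approach. The event fields, the `run_micro_batch` function, and the metric name are hypothetical, and the two calls stand in for two scheduled runs ten minutes apart.

```python
from datetime import datetime


def run_micro_batch(events, watermark, metrics):
    """Process only signups newer than the watermark and return the new watermark."""
    new_events = [e for e in events if e["signed_up_at"] > watermark]
    metrics["total_signups"] += len(new_events)
    # If nothing new arrived, keep the old watermark.
    return max((e["signed_up_at"] for e in new_events), default=watermark)


# Hypothetical signup events already landed in the source table.
events = [
    {"user": "ana", "signed_up_at": datetime(2024, 1, 1, 9, 2)},
    {"user": "ben", "signed_up_at": datetime(2024, 1, 1, 9, 8)},
]
metrics = {"total_signups": 0}
watermark = datetime(2024, 1, 1, 9, 0)

# Run at 09:10: picks up the two signups above.
watermark = run_micro_batch(events, watermark, metrics)

# A new signup arrives before the 09:20 run.
events.append({"user": "cy", "signed_up_at": datetime(2024, 1, 1, 9, 14)})
watermark = run_micro_batch(events, watermark, metrics)

print(metrics, watermark)
```

Because each run filters on the watermark, the second run counts only the one new signup instead of reprocessing the first two.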

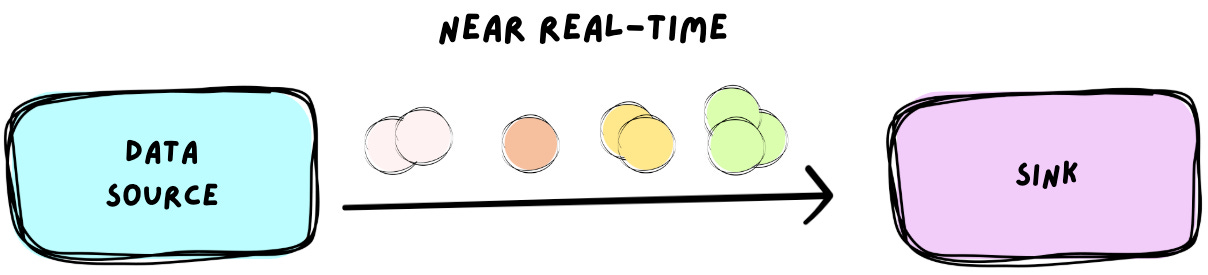

What is Near Real-Time Processing?

Near real-time processing aims to reduce the delay between data creation and data insight, without diving into the full complexity of streaming systems.

Key Features:

Achieves a balance between batch and full streaming by minimising delay without needing a complex streaming architecture.

Data is processed continuously or at very short intervals, rather than on a long fixed schedule.

Trades some efficiency for faster insight.

Keeps user-facing insights fresh and responsive without being fully real-time.

Common Use Cases:

Payment fraud detection: Identifying suspicious transactions in real time.

IoT monitoring and control: Responding to sensor data with immediate actions.

Network security: Detecting and responding to threats as they emerge.

Example:

A logistics company tracks delivery trucks and updates the dashboard every 5 minutes. This gives dispatchers fresh information without needing full streaming, which makes it a good fit for near real-time processing.

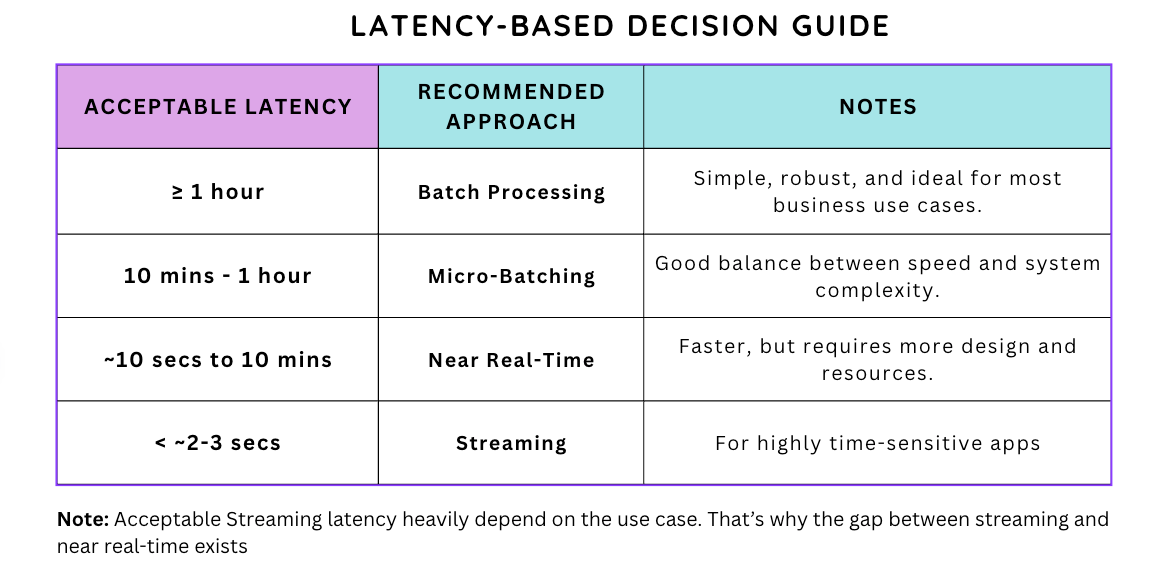

Which method to choose?

When choosing between batch, micro-batch, near real-time, or streaming, a good starting point is to ask:

How fresh does the data really need to be?

Sometimes, stakeholders might say, “We need updates every hour,” but after digging deeper, you may discover that daily updates are actually enough. So, it's important to understand the true business need before making a decision.

Here's a simple guide to help you choose the right data processing strategy based on how quickly data needs to be available:

In the coming week, we’ll dive deeper into how to choose the right data processing approach: what to use, when to use it, and why. We'll also share our experiences and insights from real-world projects. Stay tuned!

Key Interview Questions

In a data engineering interview, you’ll commonly be asked to:

Explain the differences between batch, micro-batch, and streaming processing.

Describe where micro-batching is typically used.

Discuss the pros and cons of each approach.

Interviewers may also present real-world scenarios and ask:

How would you design a data pipeline for a given use case?

Would you choose batch, micro-batch, or another approach, and why?

They want to assess whether you:

Understand important trade-offs such as latency, cost, and complexity.

Have practical experience implementing these methods in real projects.

Note: Before jumping into a solution, always take time to understand the full scope of the problem.

Try to:

Gather as many requirements and constraints as possible from stakeholders.

Clarify the desired latency, data volume, and data sources.

Ask about available tools, infrastructure, and budget.

Consider factors like data quality, schema evolution, and retention policies.

This helps ensure your solution is not only technically sound but also practical and aligned with business needs.

If you're still getting used to thinking like a data engineer, this short post might help clarify the mindset and approach required:

Note: We have previously implemented both ETL and ELT pipelines using batch and micro-batch processing, which are available in our repository. In upcoming posts, we will be sharing additional implementations focusing on near real-time and streaming solutions.

Q1:

Scenario:

We receive daily CSV files from 50 regional stores uploaded into cloud storage (e.g., S3, Azure Blob). The business needs an aggregated sales report once per day, showing total sales per region.

Expected Candidate Follow-up Questions:

Before proposing a solution, the candidate should ask clarifying questions such as:

What time are the files typically available each day?

Is daily insight sufficient, or is there a need for fresher data (e.g., hourly or near real-time)?

Are the file formats and schemas consistent across all stores? If not, how do we handle schema evolution?

What are the data quality expectations? Do we need to handle late or corrupt files?

Are there any downstream systems depending on this report?

What tools and compute environment are already available (e.g., Spark on EMR, Databricks, etc.)?

Answer

Since data arrives once per day and the business only requires a daily aggregated report, batch processing is the most suitable solution. It is cost-effective, simpler to orchestrate, and well-aligned with the reporting frequency.
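A minimal sketch of the daily aggregation step is shown below. It uses only the Python standard library so it can run anywhere. The column names (`store_id`, `region`, `amount`) are assumptions, since the scenario does not specify a schema, and the in-memory files stand in for objects that would be read from cloud storage.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal


def total_sales_per_region(csv_files):
    """Sum the 'amount' column per 'region' across all store files for the day."""
    totals = defaultdict(Decimal)
    for f in csv_files:
        for row in csv.DictReader(f):
            totals[row["region"]] += Decimal(row["amount"])
    return dict(totals)


# Hypothetical daily files from three stores (assumed schema).
store_a = io.StringIO("store_id,region,amount\n1,North,100.50\n1,North,20.00\n")
store_b = io.StringIO("store_id,region,amount\n2,South,75.25\n")
store_c = io.StringIO("store_id,region,amount\n3,North,10.00\n")

report = total_sales_per_region([store_a, store_b, store_c])
print(report)
```

At larger volumes the same group-and-sum logic would typically run in an engine such as Spark or in the warehouse itself, triggered once per day by an orchestrator after all files have arrived.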

Q2:

Scenario: You're working with a manufacturing company that has installed sensors throughout its production facilities. After discussions with stakeholders, you've identified these requirements:

Equipment health monitoring, with alerts for potential failures, updated every 15 minutes.

Daily production efficiency reports for management.

Quality control metrics are refreshed hourly for floor supervisors.

Energy consumption patterns are analysed monthly for optimisation.

Design a data processing architecture using appropriate batch, micro-batch, or near real-time approaches for each requirement. Explain your choices and their trade-offs in terms of cost, complexity, and business value. For the equipment health monitoring component, provide a detailed Spark implementation showing how you would structure the micro-batch or near real-time processing.

Answer:

Based on the manufacturing company's requirements, I'll design an appropriate processing architecture:

Equipment Health Monitoring: Near Real-time Processing (15-minute intervals)

Processing in 5-minute micro-batches detects problems quickly and stays well within the 15-minute update requirement.

Strikes a balance between timeliness for maintenance activities and reasonable system overhead.

Provides a 15-minute response window to prevent equipment damage and downtime.

Production Efficiency Reports: Batch Processing (Daily)

Classic batch processing case for consolidated daily metrics.

Allows for computationally intensive calculations across full production data.

Scheduled overnight when systems have less load.

Quality Control Metrics: Micro-batch Processing (Hourly)

The hourly refresh rate is adequate for supervisor intervention.

Enables more complex quality analysis than a real-time approach.

Stable metrics that don't fluctuate minute to minute.

Energy Consumption Analysis: Batch Processing (Monthly)

Long-term trend identification benefits from full batch processing.

Resource-intensive analytics are better suited to a less frequent schedule.

Focus on patterns rather than immediate action items.
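For the equipment health monitoring component, the core logic is to group sensor readings into 5-minute windows per machine and raise an alert when a window looks unhealthy. The sketch below shows that logic in plain Python so it runs without a cluster. In Spark, the same grouping would typically be expressed with Structured Streaming, using a time window on the event timestamp and a processing-time trigger. The machine IDs, the temperature metric, and the `MAX_AVG_TEMP_C` threshold are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean

WINDOW = timedelta(minutes=5)
MAX_AVG_TEMP_C = 90.0  # hypothetical alert threshold


def window_start(ts):
    """Floor a timestamp to the start of its 5-minute window."""
    epoch = datetime(1970, 1, 1)
    return epoch + ((ts - epoch) // WINDOW) * WINDOW


def find_alerts(readings):
    """Average temperature per machine and window; return the windows over threshold."""
    grouped = defaultdict(list)
    for machine_id, ts, temp_c in readings:
        grouped[(machine_id, window_start(ts))].append(temp_c)
    return sorted(
        (machine_id, start, mean(temps))
        for (machine_id, start), temps in grouped.items()
        if mean(temps) > MAX_AVG_TEMP_C
    )


# Hypothetical sensor readings: (machine_id, event_time, temperature in Celsius).
readings = [
    ("press-1", datetime(2024, 1, 1, 10, 1), 85.0),
    ("press-1", datetime(2024, 1, 1, 10, 3), 99.0),
    ("press-1", datetime(2024, 1, 1, 10, 6), 80.0),
    ("lathe-2", datetime(2024, 1, 1, 10, 2), 70.0),
]

alerts = find_alerts(readings)
print(alerts)
```

Averaging over a window rather than alerting on single readings trades a few minutes of latency for fewer false alarms, which fits the 15-minute requirement.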

Q3: What are some challenges you've faced with batch data pipelines?

Answer:

In my experience, the most significant challenges with batch pipelines include:

Data arrival dependencies:

Late-arriving data disrupts processing schedules.

Handling upstream system failures.

Implementing wait conditions and timeout mechanisms.

Processing window constraints:

Ensuring jobs are completed within their allocated window.

Managing gradually increasing data volumes within fixed windows.

Dealing with unexpected processing spikes.

Data quality issues:

Implementing robust validation without excessive overhead.

Balancing between failing fast vs. partial processing.

Creating actionable alerts for data quality problems.

Idempotency and reprocessing:

Designing pipelines that can safely rerun without duplications.

Implementing checkpointing for efficient restarts.

Managing state during partial failures.

Resource contention:

Handling multiple concurrent batch jobs competing for resources.

Prioritising critical vs. non-critical processing.

Implementing fair scheduling policies.

Monitoring and observability:

Building comprehensive SLI/SLO tracking.

Creating meaningful alerting thresholds.

Establishing clear data lineage for troubleshooting.

The most effective solutions typically involve implementing proper partitioning strategies, robust error handling with automatic retries, comprehensive monitoring, and clear documentation of dependencies between different batch processes.
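As an illustration of the idempotency point, one widely used pattern is to write each run's output to a partition keyed by the run date and overwrite that partition on every run, so a rerun replaces data instead of appending to it. The sketch below assumes that pattern. The dictionary stands in for a partitioned table or storage path, and the function and field names are hypothetical.

```python
def write_partition(store, run_date, rows):
    """Overwrite the partition for run_date so a rerun replaces it, never appends."""
    store[run_date] = list(rows)


def run_daily_job(store, run_date, source_rows):
    """Drop rows with missing amounts, then write the result for this run date."""
    cleaned = [r for r in source_rows if r["amount"] is not None]
    write_partition(store, run_date, cleaned)


# Hypothetical source rows for one day; 'store' stands in for a partitioned table.
store = {}
rows = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 7},
]

run_daily_job(store, "2024-01-01", rows)
run_daily_job(store, "2024-01-01", rows)  # rerun after a failure: same result

print(store)
```

An append-based write would have left four rows after the rerun. With overwrite-by-partition, the output is the same no matter how many times the job runs for that date.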

Q4: What are the trade-offs between batch and micro-batch processing in terms of cost, scalability, and complexity?

Answer:

Batch processing is generally more cost-effective and simpler to manage. It runs less frequently, which reduces compute costs, and it is easier to develop, test, and monitor. It’s ideal when data freshness isn’t critical.

Micro-batching offers lower latency by processing data in smaller intervals (e.g., every few minutes), but it introduces higher costs due to more frequent compute usage. It’s also slightly more complex, requiring careful scheduling, monitoring, and handling of late-arriving data.

In terms of scalability, both approaches can scale well, but micro-batching may need more tuning as data volume and frequency increase.
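The cost difference can be made concrete with some rough arithmetic. The sketch below assumes a simple model in which every run pays a fixed startup overhead (for example, cluster spin-up) and the total processing work per day is the same regardless of how it is split. Both numbers are invented for illustration, and the model does not apply to always-on clusters.

```python
def daily_compute_minutes(interval_minutes, startup_minutes, work_minutes_per_day):
    """Total compute minutes per day: per-run overhead times runs, plus the work itself."""
    runs_per_day = (24 * 60) // interval_minutes
    return runs_per_day * startup_minutes + work_minutes_per_day


# Assumed figures: 2 minutes of startup per run, 60 minutes of real work per day.
daily_batch = daily_compute_minutes(24 * 60, startup_minutes=2, work_minutes_per_day=60)
micro_batch = daily_compute_minutes(10, startup_minutes=2, work_minutes_per_day=60)

print(daily_batch, micro_batch)
```

Under these assumptions, one daily run costs 62 compute minutes, while a 10-minute micro-batch schedule costs 348, almost all of it startup overhead. Actual ratios depend heavily on the platform and on how the jobs are deployed.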

Conclusion

Batch processing remains a cornerstone of data engineering despite the growing popularity of real-time solutions. Understanding when and how to implement batch processing, whether in its traditional, micro-batch, or near real-time form, is essential for building efficient, scalable data pipelines.

The key is choosing the right processing pattern based on your specific business requirements, considering factors like data volume, freshness requirements, available resources, and system complexity. Many modern data architectures incorporate a combination of approaches, using batch processing for resource-intensive operations while leveraging streaming for time-sensitive workloads.

By mastering these concepts, you'll be well-prepared for both technical interviews and real-world data engineering challenges.

If you're interested in learning about 10 Pipeline Design Patterns for Data Engineers:

If you want to learn how to design and implement Small-Scale Data Pipelines:

And if you’re looking to get started with Apache Spark, please check out this post:

We Value Your Feedback

If you have any feedback, suggestions, or additional topics you’d like us to cover, please share them with us. We’d love to hear from you!

[1] https://dataengineeringcentral.substack.com/p/they-said-streaming-would-overtake

[2] https://pipeline2insights.substack.com/p/data-ingestion-batch-vs-stream-processing

[3] https://pipeline2insights.substack.com/t/data-engineering-life-cycle

[4] https://pipeline2insights.substack.com/t/interview-preperation